How Close is AI to Taking Over the Role of the Instructional Designer?

The Results Part 2: Selecting an Instructional Strategy

Hello friends 👋

Welcome back to our three-part series exploring the impact of AI on instructional design.

Last week, we explored how well AI fares when writing learning objectives. If you missed it, you can catch up here.

This week, we're tackling a second task and a crucial aspect of instructional design: selecting instructional strategies.

The ability to select appropriate instructional strategies to achieve intended objectives is a mission-critical skill for any instructional designer. So, can AI help us do a good job of it?

Let's find out!

Quick Recap

Before we jump into the results, let's recap our experiment setup:

First, I selected three common ID tasks of varied complexity:

Writing learning objectives.

Selecting an instructional strategy.

Creating a course outline.

Then, I enlisted three colleagues to complete these tasks using the same course design brief:

Colleague 1: An experienced instructional designer who completed the tasks without any AI assistance. Hypothesis: old-school methods (no AI) are best.

Colleague 2: A “novice” colleague who isn’t an instructional designer and completed the three tasks with help from AI (ChatGPT 4.0 + Consensus GPT). Hypothesis: AI enables anyone to “do an ID’s job” effectively.

Colleague 3: An experienced instructional designer who completed the three tasks with help from AI (ChatGPT 4.0 + Consensus GPT). Hypothesis: AI enhances the speed and quality of experienced IDs' work.

Next, I asked my network to blind-score the quality of the outputs for each task and try to guess which were produced by the expert ID, the expert ID + AI and the novice + AI.

Over 200 instructional designers from around the world took me up on my invitation, with some very interesting results.

In last week's post, I dove into the results from task 1: writing learning objectives. This week, let's see how our three colleagues performed in task 2: selecting the best-fit instructional strategy to meet a learning goal.

Task #2: Select an Instructional Strategy

A common complaint about AI is that it makes a poor sparring partner when developing a strategy. Why do so many people think this? The assumption is twofold:

1. AI can’t think creatively, and creativity is a component of strategic thinking.

2. AI doesn’t have the required domain expertise to reliably define problems and best-fit solutions (i.e. to think strategically).

I disagree.

My hypothesis is that strategising, i.e. defining problems and generating best-fit solutions, is in fact where AI will eventually be our strongest ally. Why?

1. Because effectively defining a strategy is about both creativity and domain expertise, and AI (which is currently at its least powerful) is already showing an ability to master both of these things. Check out this study which found that AI can already outperform humans on creative tasks and these tools which show AI’s ability to access domain expertise.

2. Because effectively defining a strategy requires us to take a lot of data, analyse it and create hypotheses and recommendations. This is basically what AI is built to do.

Based on this, my prediction is that the instructional designer's role here will shift towards knowing what data to feed AI and what questions to ask it in order to, first, define the problem we're trying to solve and, second, co-create the best-fit solution to that problem.

But where are we right now? Let’s dive into this week’s results.

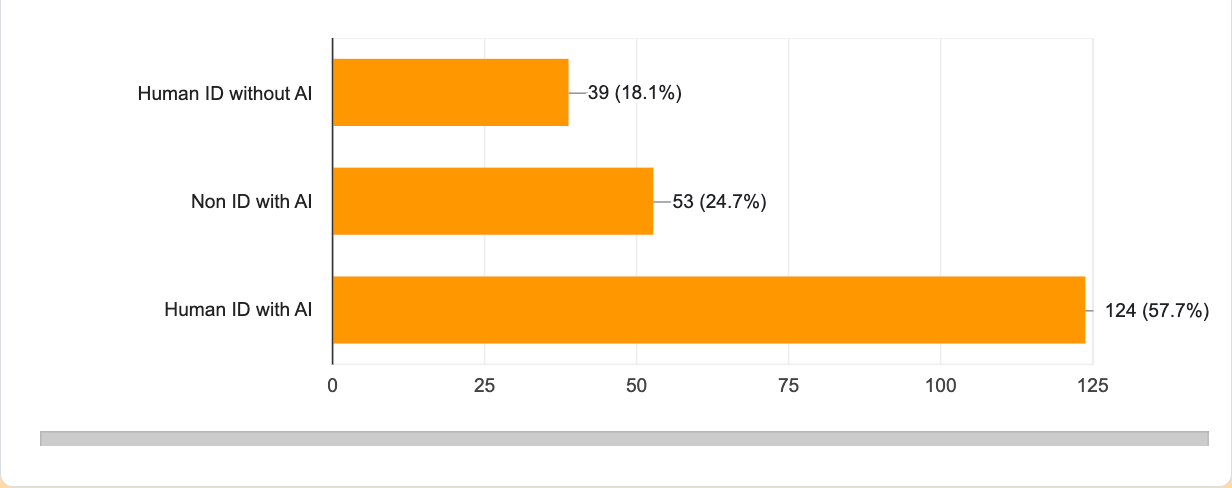

For this task, ~200 instructional designers blind-scored the quality of each strategy and tried to guess which was produced by the expert ID, the expert ID + AI and the novice + AI.

Here are the results.

🥉 Third Place: Instructional Designer (no AI)

Surprisingly (or perhaps not, given last week's results), our experienced ID working without AI came in last place. While they weren't considered to have done a terrible job, 50% of respondents rated their selected instructional strategy as poor or just okay.

Digging deeper into the feedback, I found some interesting insights:

Many testers felt the approach was too traditional and lacked engagement. According to one tester, "This strategy is every learner's nightmare. :) Sounds boring and ineffective."

The heavy reliance on direct instruction was criticised. A tester noted, "I don't think an experienced ID would focus on direct instruction only. They would know there needs to be multiple times of interaction/engagement."

Some saw it as outdated: "Old school thinking. I don't think many C21st ID would take this approach."

Interestingly, 30% of testers recognised the approach as standard in the industry, and therefore as likely the work of an expert ID with no input from AI. This suggests that some respondents recognise that many professional IDs lack the necessary skills, knowledge and/or time to develop effective instructional strategies.

Despite this, poor performance in strategic thinking was primarily attributed to a lack of ID experience and expertise; 63% of respondents assumed that the lowest-performing strategy selection was the work of a novice + AI.

TLDR: when it comes to instructional strategy work, we may be overestimating the ability of experienced instructional designers and underestimating the potential for AI to assist us in producing work that is higher-quality than traditional, human-only approaches.

This suggests a similar bias to one we saw last week: when we see subpar work, we tend to blame AI or inexperience rather than considering that an experienced human might sometimes underperform.

🥈 Second Place: Novice + AI

The silver medal for instructional strategy design (I have definitely been watching too much Olympics coverage) goes to the non-expert using AI.

52% of respondents rated their strategy as very good

27% considered it exceptional

Not a single respondent rated it as poor

Testers appreciated several aspects of this strategy:

The focus on problem-based learning (PBL) was well-received, with one tester noting: "Great strategy. Learner-centred. Engaging with material."

The emphasis on practical application was also praised: "Sound strategy, allowing students to apply learnings in practical assignments, allowing for transfer."

The approach was seen by many as promoting critical thinking and active learning.

51% of respondents attributed this stronger strategy to an expert instructional designer working without AI. So again, we see some respondents associating high-quality work with human-only effort.

Why did testers think this was the work of an experienced human ID? From the feedback provided by testers, several factors seem to have contributed to this perception:

Focus on Problem-Based Learning: The emphasis on PBL, a well-regarded instructional strategy, led many to believe an experienced ID was behind the approach.

Clear Rationale: The strategy provided clear explanations for its choices, which, again, testers associated with human expertise rather than AI.

Practical Application: The focus on real-world application and engagement was seen as a hallmark of experienced ID work.

Only 27% correctly identified this as the work of a non-expert + AI. This misattribution suggests two interesting things:

We are underestimating AI’s domain knowledge when it comes to “pro” instructional design tasks like selecting instructional strategies.

We are overestimating human IDs’ ability when it comes to “pro” instructional design tasks like selecting instructional strategies.

More broadly, AI has the potential to significantly enhance the capabilities of those new to the field, helping them produce work that is considered to be on par with that of experienced professionals.

🥇 First Place: Instructional Designer + AI

The winning strategy, once again, was that produced by the experienced instructional designer working with AI. The results speak for themselves:

33% rated it as exceptional

43% rated this instructional strategy as very good

19% considered it good

As one tester put it: "Spectacular. Covers all of ways to learn and drive behaviour change."

Testers were particularly impressed by two aspects of this strategy:

Research-Backed Strategies: The inclusion of citations and references to research was a big hit. A tester commented, "All the references to research makes me think Perplexity AI was leveraged here. I like how the approach is justified at the end -- it takes to a level I don't think an average human ID would without AI, and it certainly makes it more powerful as a recommendation."

Practical and Engaging: Testers also appreciated the focus on real-world application. One noted, "This strategy respects the knowledge and skills the learners would bring. The blended approach suggests that learners will be scaffolded and have some autonomy and choice."

Interestingly, the majority of respondents (58%) correctly identified this as the work of an expert ID assisted by AI. This reinforces the hypothesis that there's an emerging view that AI can be a powerful tool in the hands of experienced professionals, enhancing rather than replacing human expertise.

As one tester observed: "Most AI generated feedback tends to provide options. Along with citing specific research, this 'feels' like AI directed solution. Different solutions and activities will appeal to wider audience of learning population based on their own strengths in learning."

For other respondents, the tell-tale signs of this being a human expert + AI were:

Research Citations: Many testers associated the inclusion of research references with AI capabilities, but the strategic application of this research was attributed to human expertise.

Practical Knowledge + Theoretical Backing: The combination of practical, experience-based insights with theoretical foundations was seen as a hallmark of human-AI collaboration.

Structured Presentation: The clear structure and formatting were often associated with AI, while the overall strategic approach was attributed to human expertise.

This perception aligns with what seems to be an emerging reality: the most effective instructional design may come from a synergy between human experience and domain expertise enhanced by AI’s capabilities.

Key Takeaways So Far

Zooming out and looking at both last week's and this week's tasks, some interesting themes are starting to emerge:

The power of human + AI collaboration: Consistently across both tasks, the combination of an experienced ID with AI produced what respondents considered to be the best results. This suggests that the future of instructional design may lie in effective human-AI collaboration rather than in AI replacing human roles.

Mixed feelings on AI: Across both tasks, we've seen some respondents associate poor quality with the use of AI, suggesting a lack of confidence in AI's ability to enhance our day-to-day work.

AI as an equaliser: In both tasks, we saw that AI assistance helped novices produce work that was often indistinguishable from, or even superior to, that of experienced IDs working alone. This indicates that AI tools have the potential to significantly level the playing field in instructional design. This aligns with research by Harvard and Boston Consulting Group, which found that AI had the most significant positive impact on both efficiency and effectiveness when used by novices.

Looking Ahead

Next week, we'll conclude this project by analysing how AI fared in a final, complex task that many IDs consider to be at the heart of their craft: creating course outlines.

Subscribe and stay tuned for the final instalment. In the meantime, I'd love to hear your thoughts:

How do these results align with your own experiences using AI in instructional design?

Are you seeing similar synergies in your work, or have you encountered different challenges?

Share your thoughts via the related LinkedIn post.

Happy experimenting!

Phil 👋

PS: Want to work with me and learn how to integrate AI into your day-to-day ID work? Apply for a place on my AI-Powered Learning Design Bootcamp. Spots for 2024 are filling up fast!