Many of us educators hoped that ChatGPT would operate like The Librarian from the sci-fi novel, Snow Crash. In the novel, The Librarian is an AI-powered super-machine capable of accessing and summarising reliable, peer-reviewed research on demand.

In reality, ChatGPT isn’t the powerful librarian and research assistant we hoped it would be.

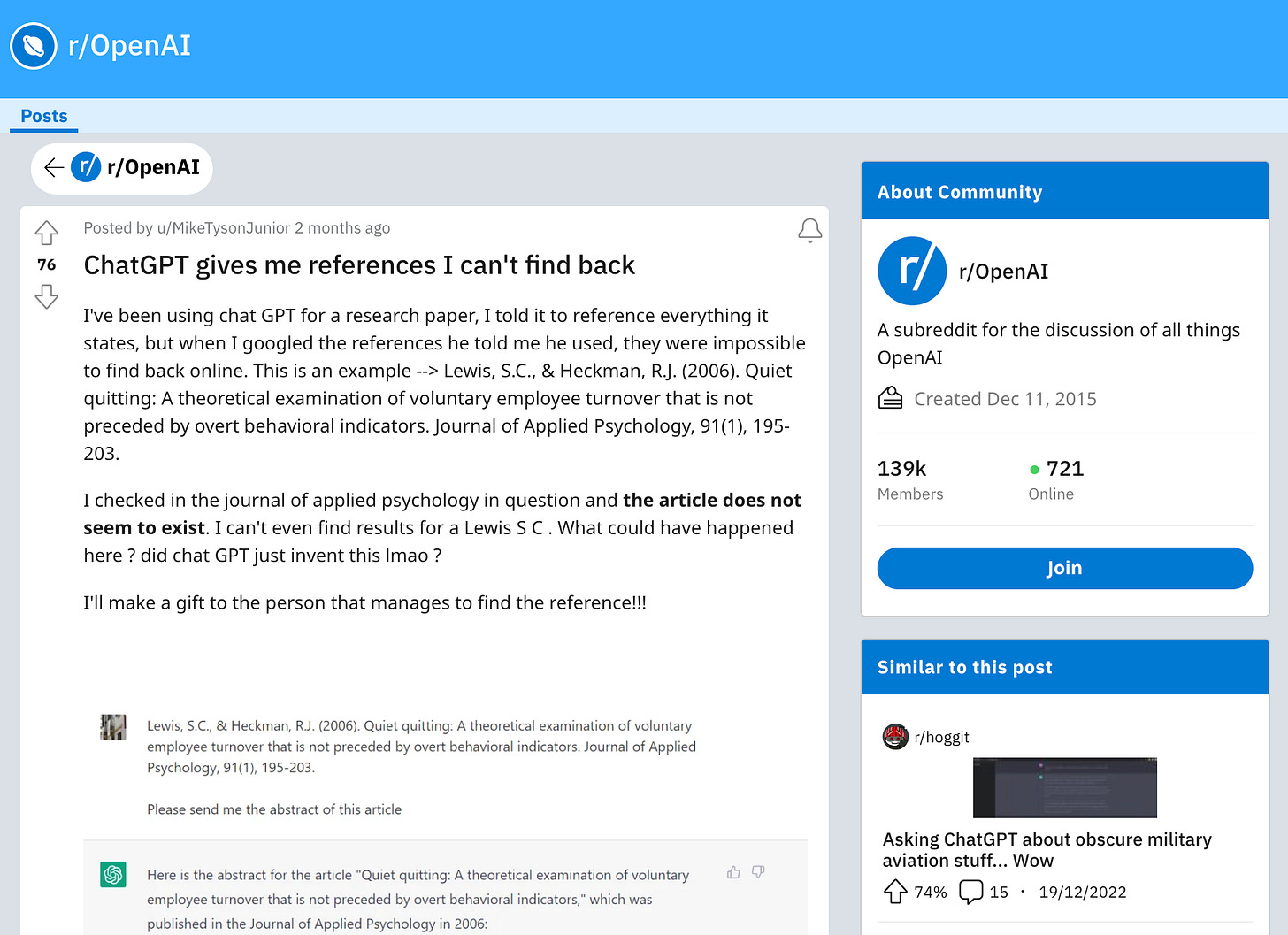

As many educators have pointed out, ChatGPT’s citing capabilities are not great. Errors in encoding and decoding between text and representations mean that in some cases ChatGPT mis-cites references. In some cases, it simply makes references up (more on this below).

OpenAI is keenly aware of “the hallucination problem”. They and many others are actively working to improve the quality and reliability of Large Language Models (LLMs) and instruction-following functionality to try to solve the problem.

In the meantime, what can we do - if anything - to improve the quality & reliability of the references ChatGPT cites?

This is the question I asked myself earlier this week, and here’s the story so far.

Experiment 1: Prompt Structure

ChatGPT likes structure. My first test was to see whether more structured prompting would generate more reliable references. I did this by writing a structured prompt which gave ChatGPT a specific role, task and instructions.

The Prompt:

ChatGPT’s Response:

At first glance, the results are pretty exciting: six references on the topic I requested.

But wait.

On closer inspection, ~80% of the sources were made up. This was the average result across a number of topics using this prompt. Not great.

Lessons Learned

Always assume that ChatGPT is wrong until you prove otherwise.

Validate everything (and require your students to validate everything too).

Google Scholar is a great tool for validating ChatGPT outputs rapidly.
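If you want to keep score while validating, the tally behind a figure like "~80% made up" can be sketched in a few lines of Python. This is only an illustration of the bookkeeping, not the method used for the numbers in this post: the `CheckedReference` class and `fabrication_rate` function are hypothetical names, and the `found_in_scholar` flag is something you set by hand after searching Google Scholar for each title and author.

```python
from dataclasses import dataclass


@dataclass
class CheckedReference:
    """One reference from a ChatGPT response, after a manual check."""
    title: str
    authors: str
    found_in_scholar: bool  # set by hand after searching Google Scholar


def fabrication_rate(refs):
    """Return the share of references (0.0 to 1.0) that could not be found."""
    if not refs:
        raise ValueError("No references to score")
    made_up = sum(1 for ref in refs if not ref.found_in_scholar)
    return made_up / len(refs)


# Placeholder entries only; swap in the references you actually checked.
checked = [
    CheckedReference("Placeholder title A", "Placeholder author", True),
    CheckedReference("Placeholder title B", "Placeholder author", False),
    CheckedReference("Placeholder title C", "Placeholder author", False),
    CheckedReference("Placeholder title D", "Placeholder author", False),
    CheckedReference("Placeholder title E", "Placeholder author", False),
]
print(f"{fabrication_rate(checked):.0%} of references could not be validated")
```

Keeping one list per prompt and topic makes it easy to compare prompts on the same footing.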

Experiment 2: Source Specificity

Since I had validated The Reliability Problem, what if I added parameters which restricted ChatGPT’s output to only reliable sources?

The Prompt:

ChatGPT’s Response:

With a little help from Google Scholar, I was able to validate that 100% of the references generated using this prompt were real and reliable. Bingo!

But, hang on.

When I changed topic, the results were more varied. On average, ~75% of the references generated by this prompt were reliable. Definitely an improvement, but definitely not perfect.

Side Note: in the process of this search, I also confirmed the well-known fact that ChatGPT’s URLs are not reliable. That said, ChatGPT thrives on intentional repetition; requesting Google Scholar URLs is a helpful way to remind ChatGPT that it should focus only on this source (but ignore the links it provides).

Lessons Learned

The prompt works better when you provide a subject area, e.g. visual anthropology, and then a sub-topic, e.g. film making.

Ignore ChatGPT’s links - validate by searching for titles & authors, not URLs.

Use intentional repetition, e.g. of Google Scholar, to focus ChatGPT’s attention.

Be aware: ChatGPT’s knowledge ends in 2021, so it cannot cite anything published since then. You need to fill in the blanks yourself.
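For anyone who wants to reuse these lessons across topics, here is a minimal Python sketch of a prompt builder. It is not the prompt used in this experiment: the wording, the `build_reference_prompt` function and its parameters are all assumptions made for illustration. It simply combines a subject area, a sub-topic, and a deliberately repeated mention of Google Scholar.

```python
def build_reference_prompt(subject_area, sub_topic, count=5, source="Google Scholar"):
    """Assemble a structured prompt: role, task, then instructions.

    The wording is hypothetical. The source name is repeated on purpose
    (intentional repetition) to keep the model focused on it.
    """
    lines = [
        f"Role: You are a research librarian specialising in {subject_area}.",
        f"Task: List {count} peer-reviewed references on {sub_topic} within {subject_area}.",
        "Instructions:",
        f"- Only include references that can be found on {source}.",
        "- For each reference, give the authors, year, title and journal.",
        f"- Add the {source} URL for each reference.",
    ]
    return "\n".join(lines)


print(build_reference_prompt("visual anthropology", "film making"))
```

The URLs it asks for are there to focus the model, not to be trusted; validation still happens by searching for titles and authors.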

Experiment 3: Refinement

Now that I had the beginnings of a prompt formula for generating reliable results, what if I added parameters which a) somehow restricted ChatGPT’s output to the highest-quality references and b) made the outputs more useful?

The Prompt:

Note: I changed the topic from anthropology to accounting to start from a clean slate.

ChatGPT’s Response:

With a little help from Google Scholar, I was able to validate that 100% of the references generated using this prompt were real and reliable.

My research is not exhaustive or final (I need you!) but a mixed sample of topics and sub-topics across a range of disciplines has yielded ~98% accuracy. Bingo.

Lessons Learned:

The prompt works better when you provide a subject area, e.g. visual anthropology, and then a sub-topic, e.g. film making.

Ignore ChatGPT’s links - validate by searching for titles & authors, not URLs.

Be aware: ChatGPT’s knowledge ends in 2021, so it cannot cite anything published since then. You need to fill in the blanks yourself.

What Next? From Librarian > Professor

Of course, if we want reliable references we can just go straight to Google Scholar. The next stage of my research is to explore the implications of The Librarian for content & activity generation.

If we’re able to generate reliable references using ChatGPT as Librarian, what might we then be able to do with those references if we position ChatGPT as Professor? This is where the real magic starts to happen.

Here’s a sneak peek at some early experimentation with using ChatGPT to rapidly turn The Librarian’s expertise into reliable learning content.

Prompt:

ChatGPT Response:

Over to you! This is an ongoing experiment. I’d love you to try some or all of these prompts and share what works and what doesn’t work.

Let’s experiment and learn together!

Happy designing,

Phil 👋

Did you find any change in performance on this topic since GPT-4 came out?

Have you tried WebChatGPT on Chrome? It gives both ChatGPT output AND real sources. I tried it several times; most of the time the sources were good quality. Another approach would be to use Bing AI in Edge Dev mode, or PerplexityAI. I think our students will not be limited to one tool.