Good Vs Great Online Course Design

A walkthrough of two real courses I redesigned using DOMS™️ + tips on how you can increase average retention levels from 6% to 87%

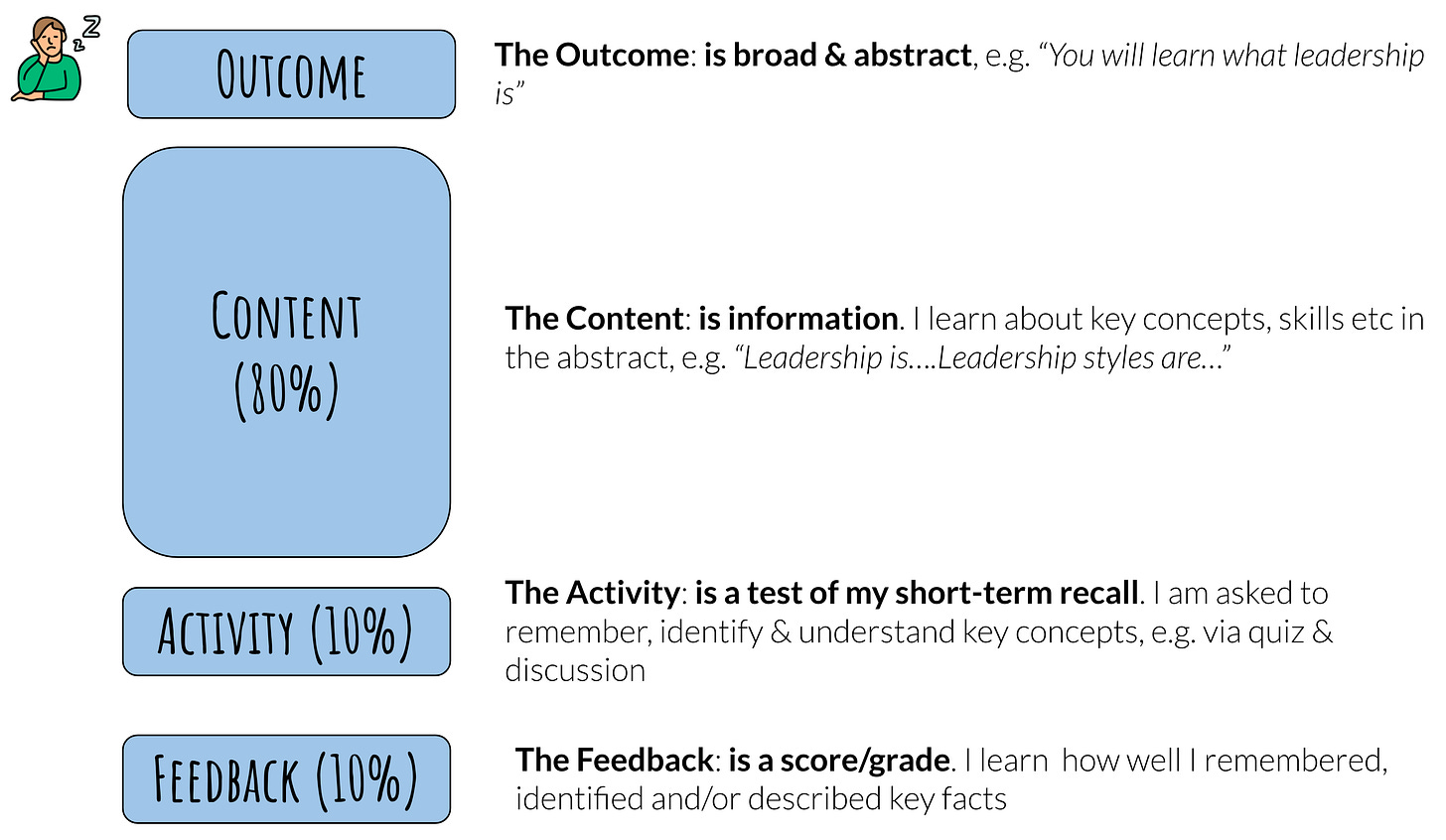

Look around the web and you’ll find there’s a formula for online course design. Typically, an online course will have an “80/10/10” structure, something like this:

The result? Learners are passive and at risk of experiencing cognitive overload.

In ~94% of cases, they drop out.

If the experience is mandatory (or if they’ve paid a lot of money for it), learners might complete the course through mindless box-ticking. In the process, they learn very little and leave frustrated.

What Does Great Online Course Design Look Like?

What does an online module look like when we use learning science research to optimise it for learner motivation & achievement?

The result? Learners are active and motivated, and cognitive load is managed.

In ~87% of cases, learners stay, persist & achieve.

Learners leave with new knowledge, skills and the confidence they need to apply these things in unsupported, real environments. You can learn more about this here.

Real-Life Examples

Below are two real-life examples from courses I have redesigned using the DOMS™️ principles and process.

In both cases:

the courses were optional (i.e. not mandatory) and paid-for (i.e. not free);

the redesigns delivered a ~10X increase in completion rates & satisfaction scores compared with the “before” design.

Example: Python 101

Before - 80/10/10 (~90 mins)

After - 10/80/10 (<1 hr), redesigned using DOMS™️

Example: Graphic Design 101

Before - 80/10/10 (~90 mins)

After - 10/80/10 (<1 hr), redesigned using DOMS™️

Key Takeaways

When designing your course, keep the following DOMS™️ Design Principles front of mind:

Manage Cognitive Load: Our working memories have a limited capacity. Learners learn better when information, including learning content, is minimised & presented in a way that doesn’t unnecessarily tax working memory. Use minimum viable content and emphasise action (activity) over consumption (content).

Learn, Practice & Assess in Context: Learners learn best when the experience is as authentic or “close to real” as possible. As a rule, the most effective strategy is to select content, activities, assessments & modes that best replicate real scenarios & enable learners to apply & get feedback on their learning in context.

Optimise Outcomes: Learners learn better when all content & activity is aligned to clear learning outcomes. The wording & structure of outcomes, along with their clarity of purpose, help learners understand why the course is worth their effort. Keeping outcomes short and focused (achievable in <1 hr) helps to drive a sense of mastery and motivation.

I’d love to hear your thoughts, challenges & questions on the DOMS™️ 10/80/10 Framework - please say hi and drop your comments and questions below.

Happy designing! 👋

Want to learn & try the DOMS™️ process for yourself? Apply for a place on my learning science bootcamp, a three-week, hands-on experience where we work together to design a course of your choice using DOMS™️.

The next cohort kicks off September 19th.

You can learn more about the bootcamp & see some example content here or jump straight in and apply now using the button below.

Great post again and thanks for sharing.

We passed it round the Keypath office and some questions came back, like: what happened to the graded assessment/knowledge check? What about low-level Bloom's outcomes like "recite" or "classify"?

Is the assessment now the feedback, or is there an important distinction we missed?

Are low-level Bloom's knowledge checks built into the activities, or are they part of the feedback? And are we reading the model too linearly by trying to split them apart, when actually activities and feedback are more blurred?

Or did we miss the point :P

Thanks again Dr! DanC

Fantastic post as always! I'm missing one key takeaway, and one reason why a framework like this isn't currently 'leading' the market (volume-wise). You need to provide:

- additional functionality, or links out to platforms, that allow learners to practice rather than just play back a video + MCQs. Of course it's doable, but it takes more time, expertise and possibly cost.

- if feedback is delivered via screenshare + walkthrough, it means it's delivered to a person. If it's through social learning, you 'save' on instructor costs but need to provide, manage and moderate the platform. If it's an instructor, you need to pay that person.

So would the revised courses not be both:

- more expensive

- less scalable?

Is this the right approach? Yes. We very much believe in (a version of) this approach at my company, so I'm playing a bit of devil's advocate here! Am I surprised that this isn't the standard design? Not really, partly for those reasons (instructor / reviewer cost + expertise and confidence using different platforms).