Make Instructional Design Fun Again with AI Agents

A special edition practical guide to selecting & building AI agents for instructional design and L&D

Hi Folks!

What’s magical about instructional design and L&D is its boundless scope: on any given day we might draw on a dizzying range of skills, from psychology to UX, from project management and budgeting to storytelling and data analysis.

What's maddening about instructional design and L&D is... its boundless scope.

From writing surveys and drafting scripts to aligning with compliance standards, managing SME expectations, and QA'ing 73 versions of a learning module, our plates as instructional designers are overflowing with critical but often tedious tasks.

While we'd rather be immersed in pedagogical conversations or reimagining learning experiences, we're often stuck in the weeds of production, admin and project management.

Over the last couple of years, the narrative has evolved from “AI will take your job” to one centred on the human-AI hybrid: the "cyborg" instructional designer who stays creative and strategic by "delegating the small stuff" to AI.

Exactly how to do this has been less clear but, fuelled by the rise of so-called “Agentic AI”, more and more instructional designers are asking me: "What exactly can I delegate to AI agents, and how do I start?"

In this week's post, I share my thoughts on exactly what instructional design tasks can be delegated to AI agents, and provide a step-by-step approach to building and testing your first AI agent.

Here’s a sneak peek…

Let's dive in! 🚀

What Is an AI Agent?

There’s a lot of debate, and not 100% alignment, on exactly what an agent is and does. For the purposes of this exercise, think of agents as tireless, low-risk interns that can handle routine tasks.

Unlike basic AI chatbots or tools that only respond when prompted, AI agents are proactive systems that monitor, analyse, and act on information based on instructions you've set up in advance.

In practice, AI agents:

Act proactively (e.g., check for updates or feedback daily)

Make simple decisions (e.g., flag a survey response as "negative sentiment")

Pull from live data (e.g., Slack posts or LMS logs)

Take real-world action (e.g., update a Google Sheet, send a summary to email/Slack)

Learn from feedback loops (e.g., revise their behaviour based on your edits)

How Do You Build an Agent?

If, like most instructional designers, you regularly use tools like ChatGPT, Copilot or Claude, you might be wondering: what's the difference between prompting an AI chatbot and building an AI agent?

The key distinction is that chatbots wait for you to ask questions, while agents proactively perform tasks on a schedule or in response to triggers. It's the difference between having an assistant who sits quietly until you call and one who handles routine work automatically in the background.

Building an agent typically involves defining criteria across three key components:

1. Triggers — When should the agent run?

On a schedule (e.g. daily at 9am)

When something happens (e.g. new form submission)

After specific actions (e.g. when a course is published)

2. Instructions — What should the agent do?

Clear, detailed prompts similar to what you'd give ChatGPT

Access permissions to relevant data sources

Decision criteria for any judgments it needs to make

3. Outputs — What should the agent produce?

Where to send results (email, Slack, spreadsheet)

Format for the output (report, dashboard, summary)

Whether it needs approval before acting
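If it helps to see the three components side by side, here is a minimal Python sketch under stated assumptions: the `Trigger` and `AgentSpec` classes and the `should_run` helper are hypothetical names for illustration, not the configuration format of Zapier Agents or any other platform. It only models how a scheduled or event-based trigger might decide whether the agent fires; the instructions themselves would still be carried out by the AI model.

```python
from dataclasses import dataclass, field
from datetime import datetime, time
from typing import Optional


@dataclass
class Trigger:
    """When the agent should run (hypothetical structure, not a real platform API)."""
    kind: str                          # "schedule", "event" or "after_action"
    daily_at: Optional[time] = None    # used when kind == "schedule"
    event_name: Optional[str] = None   # e.g. "new_form_submission", "course_published"


@dataclass
class AgentSpec:
    """Hypothetical agent definition mirroring triggers, instructions and outputs."""
    name: str
    trigger: Trigger
    instructions: str                                 # plain-English prompt
    data_sources: list = field(default_factory=list)  # what the agent may read
    output_channel: str = "email"                     # email, Slack, spreadsheet
    output_format: str = "summary"                    # report, dashboard, summary
    needs_approval: bool = True                       # human sign-off before acting


def should_run(spec: AgentSpec, now: datetime,
               last_run: Optional[datetime] = None,
               event: Optional[str] = None) -> bool:
    """Return True if the agent's trigger condition is met at `now`."""
    trigger = spec.trigger
    if trigger.kind == "schedule" and trigger.daily_at is not None:
        # Fire once per day, at or after the scheduled time.
        ran_today = last_run is not None and last_run.date() == now.date()
        return now.time() >= trigger.daily_at and not ran_today
    if trigger.kind in ("event", "after_action"):
        # Fire only when the matching event has just happened.
        return event is not None and event == trigger.event_name
    return False


feedback_agent = AgentSpec(
    name="Learner Feedback Analyser",
    trigger=Trigger(kind="schedule", daily_at=time(9, 0)),
    instructions="Every day, look at all new form responses ...",
    data_sources=["Google Forms: Course Feedback"],
    output_channel="Slack",
    output_format="report",
)
print(should_run(feedback_agent, now=datetime(2025, 5, 1, 9, 5)))  # True
```

Keeping `needs_approval` on by default reflects the "approval before acting" question in the Outputs list: until you trust an agent, a human checkpoint is the safer starting point.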

In practice, the process feels similar to prompting ChatGPT, but with added structure and automation. Instead of manually pasting in data and copying out results, you're setting up a system that handles the entire workflow.

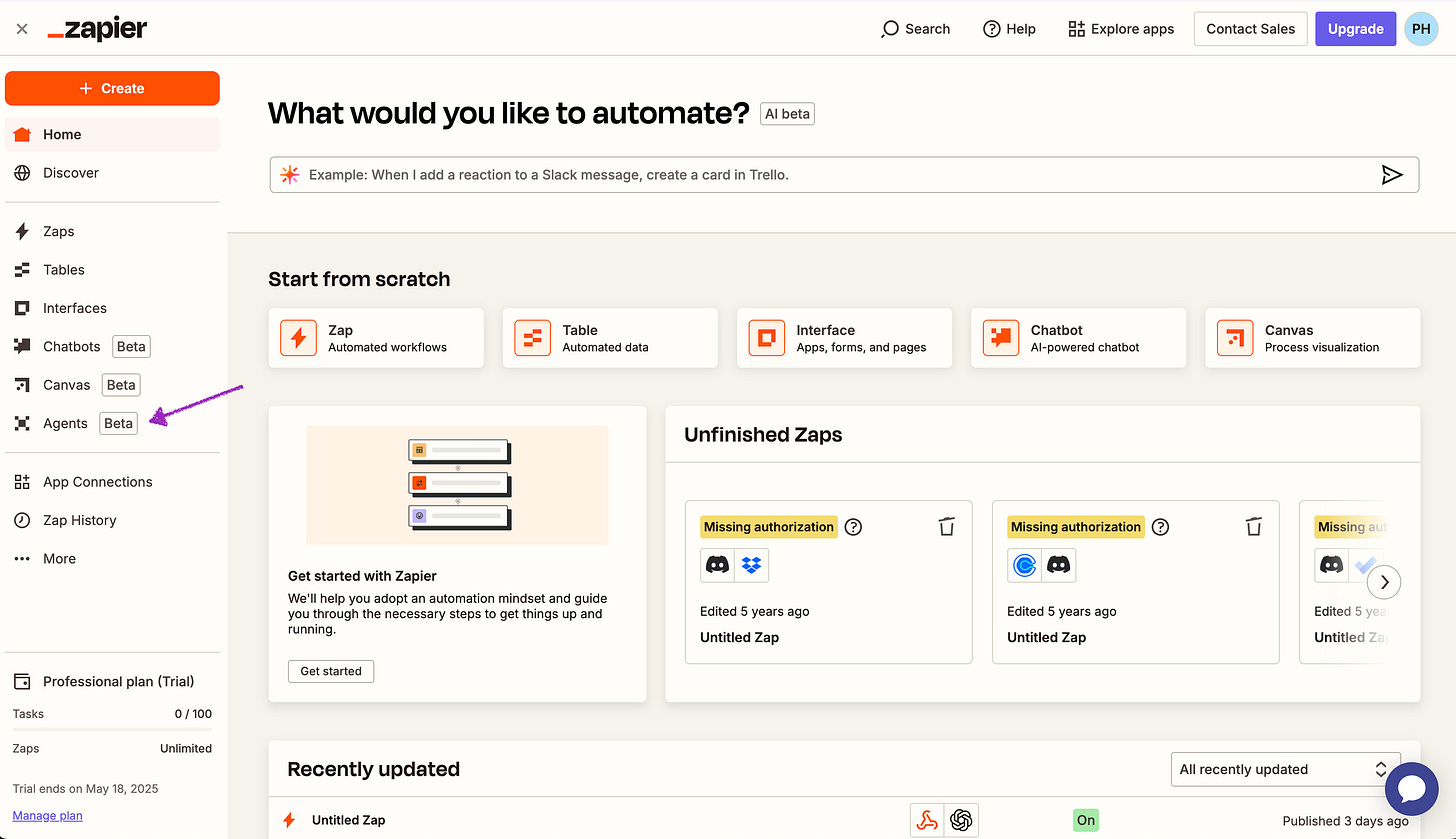

The good news is that you don't need to code to build an agent. Platforms like Zapier Agents, Make.com, Relay, and others provide visual interfaces where you can connect services, write instructions in plain English, and configure triggers and actions through dropdown menus.

The learning curve is steeper than for chat-based AI, but far lower than for traditional programming: think "filling out a complex form" rather than "writing code."

Better still, once set up, these agents work tirelessly in the background while you focus on more meaningful work. Think: front-loaded effort with back-loaded benefits.

Selecting AI Agents for Instructional Design

Over the last couple of weeks, I’ve been testing which instructional design tasks can (and can't) be delegated effectively to AI agents.

The short version of my findings so far is that AI agents excel at handling routine, data-intensive, and pattern-based tasks that follow clear rules, but struggle with tasks which require pedagogical judgment and the integration of domain expertise and learning theory.

Here’s what I’ve learned so far:

Some tasks are so structured and functional that they can be delegated & automated with minimal need for human checking and validation.

Other tasks can be delegated to an agent, but require careful checking to ensure the quality of outputs.

Some tasks require a level of judgement and expertise that makes agentic automation risky without significant human intervention.

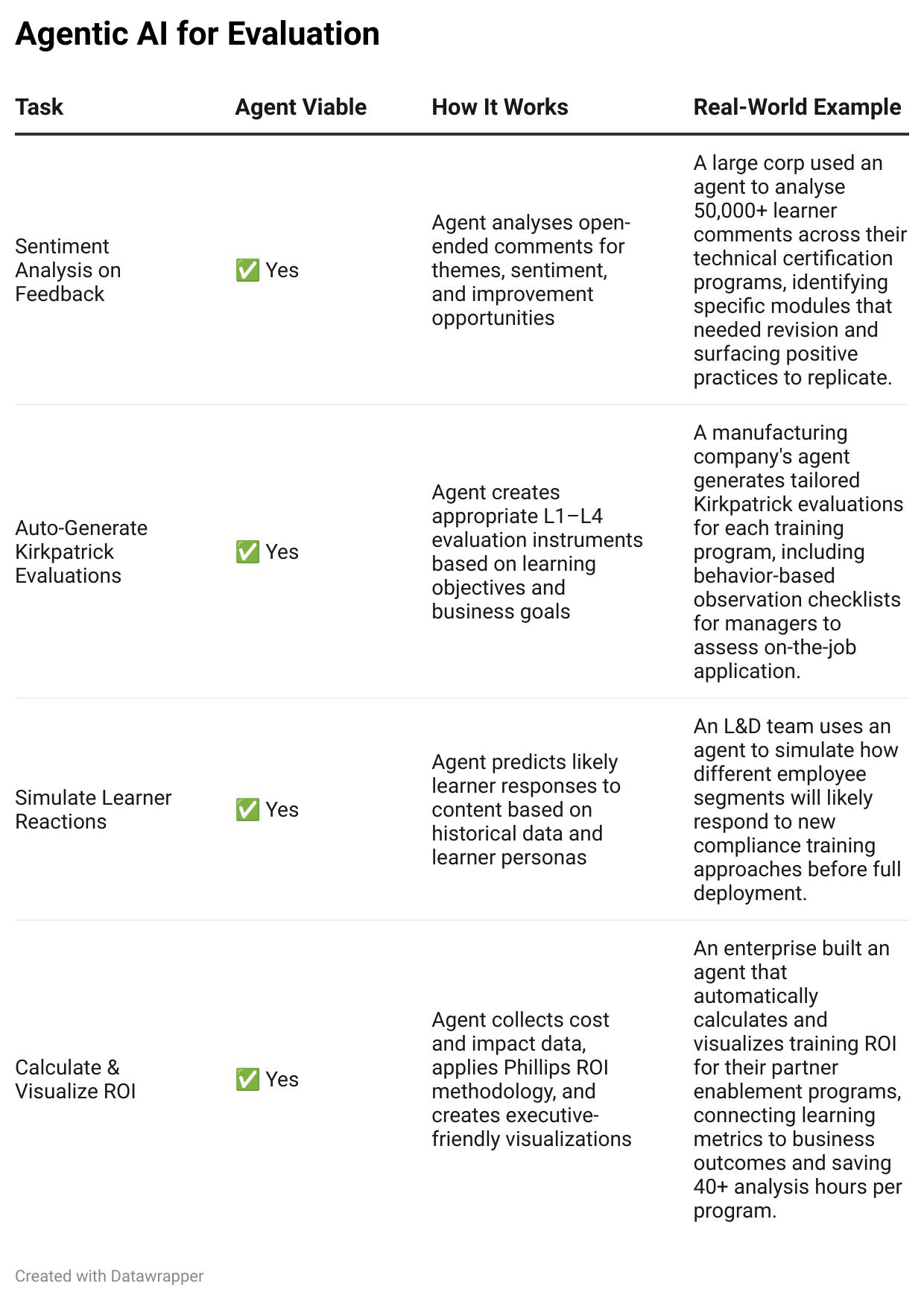

Here’s a summary of my findings:

Overall, the emerging pattern suggests that AI agents excel at handling routine, data-intensive, and pattern-based tasks that follow clear rules, while humans remain essential for making (or, at the very least, carefully checking) the strategic, creative, and pedagogical decisions that shape the core of the learning experience.

AI Agents In Practice

So what does all of this look like in practice? Let’s dive into some of the detail.

🔍 Analysis

The analysis stage is, of course, where we establish the foundation for all subsequent learning design. It includes identifying learner knowledge gaps, motivations, context and real performance challenges.

What can't be delegated: Your strategic judgment about what constitutes valid evidence, when training is actually the right solution, and how insights should shape design decisions. The critical analysis of whether a learning intervention is even necessary remains firmly human.

What AI agents can handle: The labour-intensive data collection and preliminary analysis: surfacing sentiment from Slack, simulating learner responses, categorising survey results, and even drafting initial research instruments.

✏️ Design

Design is the heart of instructional design: defining learning objectives, sequencing content, selecting instructional strategies, and choosing how learners will practice and be assessed.

What can't be delegated: Your instructional logic and empathy—knowing what to prioritise, what real-world relevance looks like, and how to scaffold learning effectively. The "soul" of good learning design comes from human experience and understanding of cognitive and emotional factors.

What AI agents can handle: Supporting you by turning goal statements into Bloom-aligned objectives, generating skill maps, outlining module flows, and even drafting assessment items aligned to objectives.

🛠️ Development

The development phase brings the design to life. You're writing scripts, producing content, building prototypes, and often QA'ing every pixel of the final product.

What can't be delegated: Your voice, tone, and learner empathy—the craft that makes learning engaging rather than robotic. The intuitive understanding of when to simplify, when to challenge, and how to maintain the delicate balance between accessibility and rigour.

What AI agents can handle: Content generation, multimedia scripting, diagram creation, and quality assurance testing: the labour-intensive aspects that often drain creative energy.

📊 Implementation

Implementation is where your learning solution becomes a reality. You're coordinating launches, managing stakeholders, tracking enrolment, and handling the inevitable hiccups as learners engage with your content.

What can't be delegated: Strategic decisions about rollout timing, stakeholder alignment, and crisis management. When something goes wrong (and it always does), human judgment and relationships are irreplaceable.

What AI agents can handle: Repetitive communication, tracking, data collection, and technical troubleshooting—all the administrative tasks that take you away from supporting the actual learning.

📈 Evaluation

Evaluation is where you measure whether learning worked—across learner reactions, skills gained, on-the-job application, and business impact.

What can't be delegated: Defining what success looks like, interpreting complex data patterns, and making decisions about program revisions. The wisdom to know what the numbers actually mean for learner success requires human expertise.

What AI agents can handle: Data collection, initial analysis, report generation, and even predictive modelling to suggest improvements—turning evaluation from an afterthought into an ongoing, manageable process.

Building Your First ID Agent: A Step-by-Step Tutorial

Now that we've explored what's possible, let's build a simple but powerful agent that addresses a common pain point: gathering, analysing, and reporting on open-ended learner feedback.

This agent will monitor survey responses, categorise feedback by sentiment and theme, and send you a daily digest with actionable insights.

We'll use Zapier Agents for this tutorial since it's accessible, requires no coding, and integrates with common tools like Google Forms, SurveyMonkey, and Slack.

Step 1: Create Your Zapier Account & Start a New Agent

Sign up for a Zapier account if you don't have one (a free account will enable you to get started)

Go to Zapier Agents (not regular Zaps)

Click Create a custom agent and name it (e.g., "Learner Feedback Analyser")

Select Start from scratch

Step 2: Set Up Your Agent's Behaviour

Next, you need to create a behaviour.

A behaviour is a specific set of instructions that defines what an agent should do, when it should do it, and how it should process and respond to information.

Here’s an example:

Every day, look at all new form responses from my Google Form survey titled "Course Feedback" submitted in the last 24 hours. For each response, analyse the open-ended feedback questions and categorise them as follows:

1. Sentiment (Positive, Neutral, Negative)

2. Main theme (Content Clarity, Learning Activities, Technical Issues, Instructor Effectiveness, Course Pace, Assessment Difficulty, Other)

3. Priority level (High - requires immediate attention, Medium - should be addressed in next iteration, Low - minor suggestion)

Create a summary report that includes:

- Total number of responses

- Distribution of sentiment (e.g., "60% Positive, 30% Neutral, 10% Negative")

- Top 3 themes mentioned across all feedback

- All high-priority feedback items with direct quotes (prefix these with "URGENT:")

- 2-3 recommended actions based on the feedback patterns

For any feedback mentioning technical issues or content errors, extract the specific problem details and organise them by module/lesson.

Send this report to me via [LINK TO EMAIL, TEAMS or SLACK] as a direct message with the subject "Daily Learner Feedback Report - [CURRENT DATE]"

Include a note at the end of the message: "To see all raw feedback, visit [LINK TO GOOGLE SHEET]"

Once you’ve added the instructions, hit create.
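Most of the behaviour above relies on the AI model's judgement (labelling sentiment, theme and priority, and drafting recommended actions), but the report's aggregation step is plain arithmetic. The Python sketch below assumes each response has already been labelled and shows how the total, sentiment percentages, top three themes, URGENT items and per-module technical issues could be computed. `CategorisedResponse` and `build_report` are hypothetical names; recommended actions and content-error detection are left to the model and omitted here. A sketch like this can also be handy for sanity-checking the agent's figures when you test it.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class CategorisedResponse:
    """One survey response after the agent has labelled it (hypothetical shape)."""
    submitted: datetime
    text: str
    sentiment: str  # "Positive", "Neutral" or "Negative"
    theme: str      # e.g. "Content Clarity", "Technical Issues", "Other"
    priority: str   # "High", "Medium" or "Low"
    module: str     # module/lesson the feedback refers to


def build_report(responses: list, now: datetime) -> str:
    """Aggregate labelled responses from the last 24 hours into a text digest."""
    recent = [r for r in responses if now - r.submitted <= timedelta(hours=24)]
    total = len(recent)
    lines = [f"Daily Learner Feedback Report - {now:%Y-%m-%d}",
             f"Total responses: {total}"]
    if total == 0:
        return "\n".join(lines)

    # Sentiment distribution, rounded to whole percentages (may not sum to 100).
    counts = Counter(r.sentiment for r in recent)
    distribution = ", ".join(
        f"{round(100 * counts[s] / total)}% {s}"
        for s in ("Positive", "Neutral", "Negative"))
    lines.append(f"Sentiment: {distribution}")

    # Three most frequent themes.
    top_themes = Counter(r.theme for r in recent).most_common(3)
    lines.append("Top themes: " + ", ".join(f"{t} ({n})" for t, n in top_themes))

    # High-priority items, quoted directly and prefixed with URGENT.
    lines += [f'URGENT: "{r.text}"' for r in recent if r.priority == "High"]

    # Technical issues grouped by module/lesson.
    issues = defaultdict(list)
    for r in recent:
        if r.theme == "Technical Issues":
            issues[r.module].append(r.text)
    for module in sorted(issues):
        lines.append(f"{module}: " + "; ".join(issues[module]))
    return "\n".join(lines)


now = datetime(2025, 5, 1, 9, 0)
sample = [
    CategorisedResponse(now - timedelta(hours=2), "Video 3 will not load",
                        "Negative", "Technical Issues", "High", "Module 2"),
    CategorisedResponse(now - timedelta(hours=5), "The scenarios were really useful",
                        "Positive", "Learning Activities", "Low", "Module 1"),
]
print(build_report(sample, now))
```

The sample data here is invented purely to exercise the function; in a real agent the labelled responses would come from your survey tool and the model's categorisation.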

Step 3: Connect Your Survey Tool

Next, you need to connect your agent to your survey tool.

If you don’t see your survey tool in the initial list of apps you can connect to your agent, skip that list and add the tool from the preview instead

In the preview, select Insert Tools & add your survey tool, e.g. Google Forms

When setting up the app, always select “let the agent select this field”; this minimises the risk of errors

Step 4: Test & Activate

Click Save, then click test behaviour to run the agent on sample data

Review the output. If needed, adjust the instructions or data selection — the Zapier bot will help you with this

When you’re satisfied, activate the agent and automate your process!

That's it! You now have an AI agent that automatically analyses feedback and delivers daily insights. Instead of spending hours manually reviewing comments, you'll start your day with actionable intelligence about what's working and what needs improvement.

Conclusion: The Human-AI Instructional Partnership

The main thing I’ve learned from experimenting with AI agents is that they’re not a silver bullet and they aren't replacing instructional designers, but they are starting to transform how we work.

By handling structured, repetitive tasks and gathering data, agents enable us to focus on what matters most, make more informed decisions and deliver more value to our learners.

The most successful instructional designers of 2025 and beyond won't be those who resist AI, nor those who blindly embrace it and automate end-to-end processes. The winners will be those who learn how to collaborate with AI effectively, increasing not just their efficiency but also their effectiveness.

As instructional design teams flatten and workloads increase, AI agents offer us a chance to reclaim what drew us to this field in the first place: the joy of creating transformative learning experiences.

That said, as with any AI collaboration, getting high-quality outputs ultimately relies on human skills, specifically:

1. Giving hyper-specific instructions

2. Setting clear expectations

3. Giving examples of what “good” looks like

4. Editing and evaluating outputs

Working well with AI “teammates” takes time, but once you get the instructions right, the impact can be huge.

Get building and share what you learn in the related LinkedIn post here.

Happy innovating!

Phil 👋

PS: Want to develop the AI skills needed for this new instructional design landscape? Apply for a place on my AI & Learning Design Bootcamp where we explore these concepts and build practical skills for the AI-powered future of learning.
