Introducing the SME Interview Bot

What are the risks & benefits of delegating SME interviews to an AI assistant?

Hey folks!

Having run thousands of interviews with Instructional Designers over the years, I’m clear on one thing: one of the key challenges we face in optimising both the speed and quality of our work is wrangling the right information from Subject Matter Experts (SMEs).

If you’ve ever spent hours chasing the right person, only to receive half-remembered insights and a tonne of impenetrable acronyms, you’re not alone. As one L&D colleague at a big corporate said to me just this week:

Access to SMEs, and the ability to consistently get the right information from them, is the single biggest challenge in our projects. We spend far more time chasing clarity than actually building training.

The frustrating reality is that we’ve all wasted hours waiting for SMEs to be available to sit down with us and spill the beans. The other thing I’ve noticed in my research is that, when we do finally get a SME in the room, we rarely extract the full story we need to optimise our design: the nuanced stuff that really makes a difference when it comes to relevance, value and impact.

In practice, most SME interviews are more art than science: unrepeatable, prone to gaps, and wildly variable depending on our (or our SME’s) mood and schedule.

This got me thinking: what if we could make SME interviewing more flexible to fit into SMEs’ timetables and, at the same time, increase the quality of the output?

I decided to test the hypothesis that it’s possible to improve both the quantity and quality of SME interviewing by - you guessed it! - building an AI bot to interview SMEs on my behalf.

My research question was simple: Can we use AI, plus years of research in instructional design and knowledge elicitation, to guarantee a smoother, faster, more complete instructional design process?

Drawing on best practice from Cognitive Task Analysis, expert elicitation, and lots of first-hand experience of the pain of working with SMEs, I built my bot to do what research says actually works for SME interviews—and to do it every single time.

Here’s how it went.

How the GPT SME Interview Bot Works

Think of my bot as a hyper-organised, always-on SME interview expert. My goal was to recreate an optimal interview experience via an AI bot. Here’s how it works for the SME:

Starts with the Evidence: The bot always opens by clearly stating its intent—to help design great training fast—and asking for every relevant doc up front (manuals, SOPs, decks, aids, etc.). This “document-first” move has been shown to boost interview accuracy, reduce omissions, and massively lower SME cognitive load (Militello & Hutton, 1998; Clark et al., 2008; EFSA EKE Protocol, 2020).

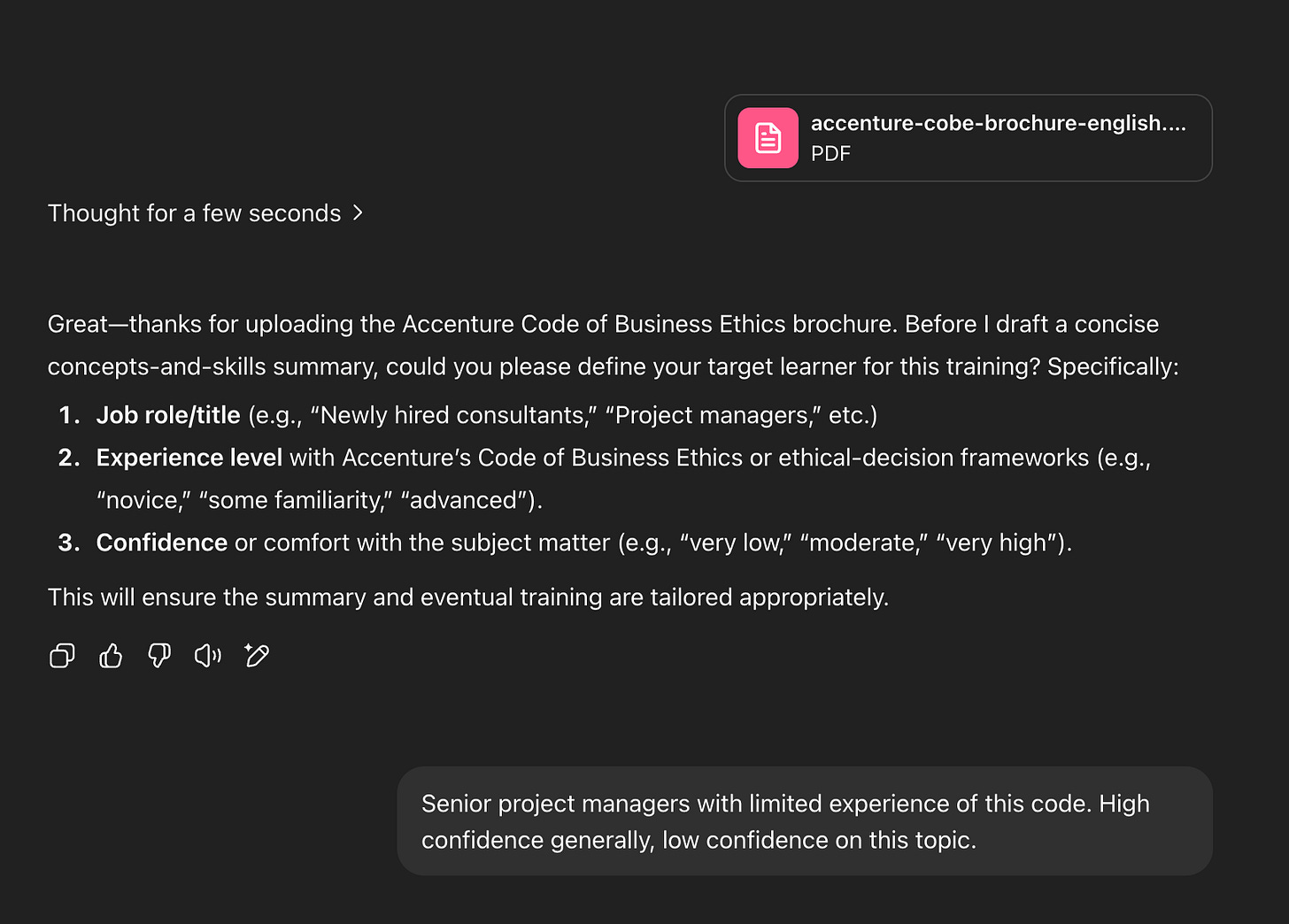

Clarifies the Learner Profile: Next the bot gets explicit about who the learning is for. (How often have you realised halfway through a call that the SME has someone totally different in mind?!) Nailing this down up front aligns the entire process with that all-important learner context (Vygotsky, 1978; Clark et al., 2008).

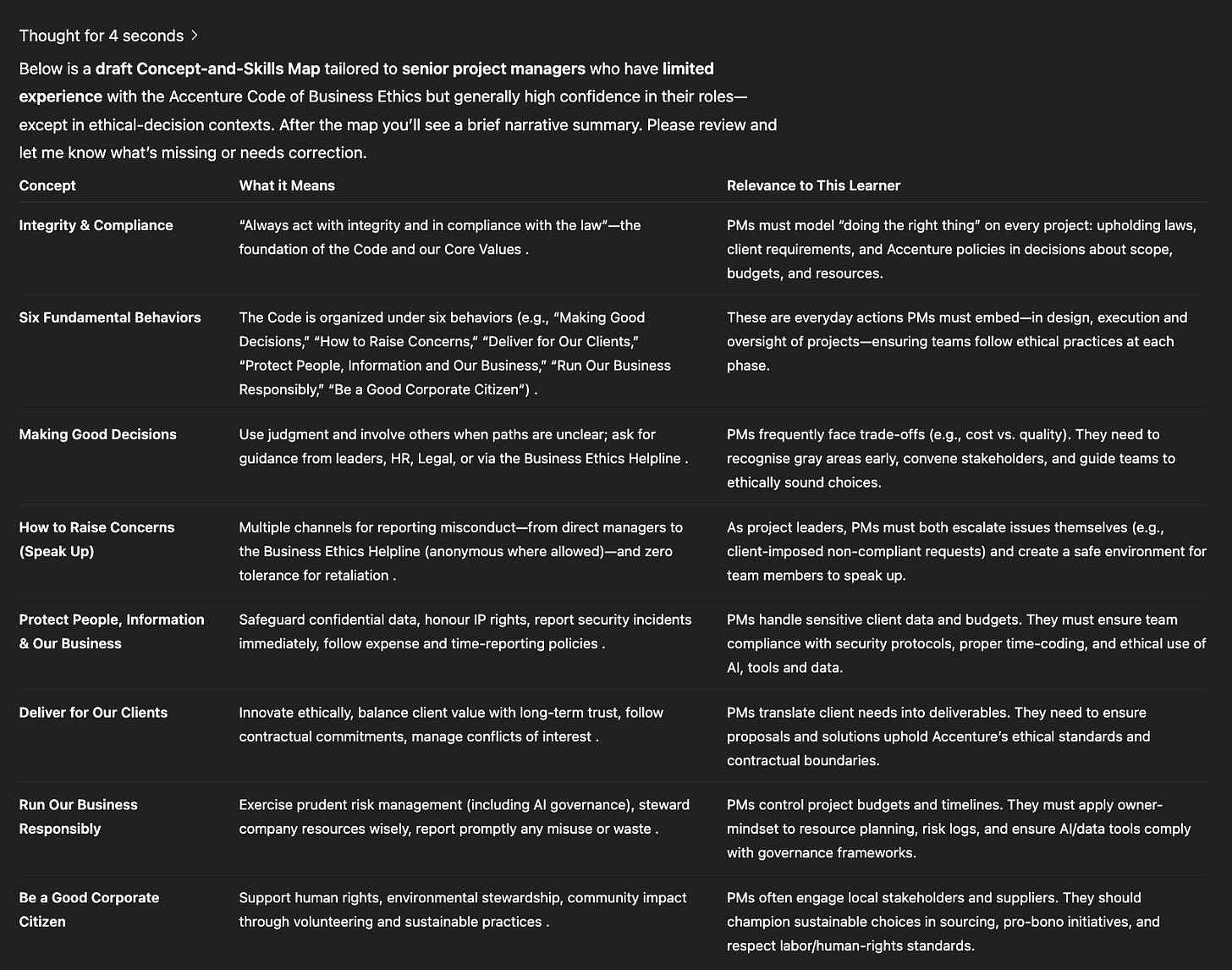

Maps the Knowledge: The bot then parses the uploaded docs along with the target learner info, and uses both to draft a concept-to-skill map before asking any questions. SMEs confirm or adjust the map on the spot, so all later probing is grounded in the facts, not hunches (EFSA EKE Protocol, 2020; Militello & Hutton, 1998).
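The post describes what the bot does here rather than how it stores anything, but if you wanted to prototype the "draft, then SME confirms or adjusts" step outside a GPT builder, a minimal Python 3.9+ sketch might look like the one below. It assumes a simple data model: every class, field, status value and file name (`LearnerProfile`, `MapEntry`, `ConceptSkillMap`, `SOP-12.pdf` and so on) is hypothetical and only illustrates the idea that probing waits until every drafted entry has been reviewed.

```python
from dataclasses import dataclass, field

# Hypothetical data model for the concept-to-skill map. The post only says the
# bot drafts the map from the uploaded docs plus the learner profile, and the
# SME confirms or adjusts it; all names here are illustrative.


@dataclass
class LearnerProfile:
    role: str                 # who the training is for
    prior_knowledge: str      # what they already know on day one


@dataclass
class MapEntry:
    concept: str              # a term, rule or procedure found in an uploaded doc
    skill: str                # what the learner should be able to do with it
    source_doc: str           # the artefact the concept was drawn from
    status: str = "draft"     # stays "draft" until the SME confirms or adjusts it


@dataclass
class ConceptSkillMap:
    learner: LearnerProfile
    entries: list[MapEntry] = field(default_factory=list)

    def _find(self, concept: str) -> MapEntry:
        for entry in self.entries:
            if entry.concept == concept:
                return entry
        raise KeyError(f"No map entry for concept: {concept}")

    def confirm(self, concept: str) -> None:
        """The SME accepts the drafted skill as written."""
        self._find(concept).status = "confirmed"

    def adjust(self, concept: str, new_skill: str) -> None:
        """The SME corrects the drafted skill on the spot."""
        entry = self._find(concept)
        entry.skill = new_skill
        entry.status = "adjusted"

    def ready_for_interview(self) -> bool:
        """Probing starts only once every drafted entry has been reviewed."""
        return bool(self.entries) and all(e.status != "draft" for e in self.entries)


# Example: two entries drafted from hypothetical uploads.
profile = LearnerProfile(role="new team leader", prior_knowledge="basic safety induction")
skill_map = ConceptSkillMap(profile, [
    MapEntry("lockout/tagout", "isolate equipment before maintenance", "SOP-12.pdf"),
    MapEntry("near-miss report", "log a near miss", "safety_deck.pptx"),
])
skill_map.confirm("lockout/tagout")
skill_map.adjust("near-miss report", "log a near miss before the end of the shift")
print(skill_map.ready_for_interview())  # True
```

Keeping a per-entry status, rather than a single "approved" flag for the whole map, mirrors the idea that SMEs can accept some items and rework others in the same pass.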

Runs a Structured, Research-Backed Interview:

The bot’s interview of the SME then follows a five-stage protocol, a format that’s gold-standard in both Cognitive Task Analysis (CTA) and expert interviewing:

Organisational context

Performance gap

Task walkthrough

STAR incidents (“Give me a real-life success or failure story”)

Materials and metrics

At each stage, it checks for “enough info,” offers SME-facing examples and scaffolds, and probes where gaps appear (Hoffman et al., 1995; EFSA EKE Protocol, 2020).
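To make the "check for enough info, then probe the gaps" loop concrete, here is a minimal Python sketch. The five stage names come from the protocol above; everything else is an assumption: "enough info" is modelled as a hypothetical list of required fields per stage, each empty answer gets one follow-up probe, and a field that is still empty after that is recorded as left blank rather than stalling the interview. It is not the bot's actual logic, which lives in prompts.

```python
# Hypothetical sketch of the five-stage protocol. Stage names come from the
# post; the per-stage "required fields" that stand in for "enough info" and
# the two-probe limit are assumptions for illustration.
STAGES = {
    "Organisational context": ["business goal", "team", "stakeholders"],
    "Performance gap": ["current performance", "target performance"],
    "Task walkthrough": ["steps", "decision points"],
    "STAR incidents": ["situation", "task", "action", "result"],
    "Materials and metrics": ["existing materials", "success metrics"],
}


def run_interview(ask, max_probes=2):
    """Walk the stages in order, probing each required field until it is answered.

    `ask` is any callable that takes a question string and returns the SME's
    reply. A field still empty after `max_probes` attempts is recorded as
    "(left blank)" so one gap does not block the rest of the interview.
    """
    transcript = {}
    for stage, fields in STAGES.items():
        answers = {}
        for name in fields:
            reply = ""
            for attempt in range(max_probes):
                # The first ask is open; later asks are gentle follow-up probes.
                prefix = "" if attempt == 0 else "Could you say a bit more? "
                reply = ask(f"[{stage}] {prefix}Tell me about the {name}.").strip()
                if reply:
                    break
            answers[name] = reply or "(left blank)"
        transcript[stage] = answers
    return transcript
```

In a console you could try it with `run_interview(lambda q: input(q + " "))`; in a chat tool, `ask` would be whatever sends a message and waits for the SME's reply. Fixing the stage order in one dictionary is what makes the interview repeatable: every SME gets the same sequence, whatever the interviewer's (or bot's) mood.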

Validates the Output:

After capturing the lot, the bot presents a structured draft for SME review. No jumping ahead: each section must be signed off before the bot moves on to the next. Need a correction, or want to leave something blank? Both are built in (Clark et al., 2008; EFSA EKE Protocol, 2020).

Delivers a Design-Ready Doc:

Once validated, the bot auto-generates and dates a file (Word, Excel, or PDF) with the Q&A transcript, summary, SME name, referenced artefacts, and next steps. It’s versioned and ready for compliance or stakeholder review (Clark et al., 2008; EFSA EKE Protocol, 2020).
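The validation and delivery steps above boil down to a gate (no output until every section is signed off) followed by a dated, versioned record. The sketch below shows that shape in Python under loud assumptions: the field names, file-naming pattern and example SME ("Sam Lee") are hypothetical, and since producing Word, Excel or PDF files needs extra libraries, it just builds a plain dict that you could serialise with `json.dump` or pass to a document library of your choice.

```python
from dataclasses import asdict, dataclass
from datetime import date

# Hypothetical sketch of the sign-off gate plus the design-ready record.
# Field names and the filename pattern are illustrative, not the bot's format.


@dataclass
class DraftSection:
    title: str
    content: str
    signed_off: bool = False  # flipped to True only when the SME approves it


def build_design_doc(sme_name, sections, transcript, summary, artefacts,
                     next_steps, version=1, today=None):
    """Assemble the design-ready record, refusing while any section is unsigned."""
    pending = [s.title for s in sections if not s.signed_off]
    if pending:
        raise ValueError("Awaiting SME sign-off for: " + ", ".join(pending))
    today = today or date.today()
    slug = sme_name.lower().replace(" ", "-")
    return {
        # Date and version in the filename keep successive runs distinguishable.
        "filename": f"sme-interview_{slug}_{today.isoformat()}_v{version}.json",
        "date": today.isoformat(),
        "version": version,
        "sme": sme_name,
        "summary": summary,
        "sections": [asdict(s) for s in sections],
        "transcript": transcript,   # Q&A pairs captured during the interview
        "artefacts": artefacts,     # documents the SME uploaded at the start
        "next_steps": next_steps,
    }


sections = [DraftSection("Performance gap", "Month-end close errors", signed_off=True)]
doc = build_design_doc("Sam Lee", sections,
                       transcript=[{"q": "What goes wrong?", "a": "Late reconciliations"}],
                       summary="Close errors trace back to late reconciliations.",
                       artefacts=["close_checklist.xlsx"],
                       next_steps=["Draft storyboard"],
                       today=date(2025, 1, 31))
print(doc["filename"])  # sme-interview_sam-lee_2025-01-31_v1.json
```

Raising an error on unsigned sections, rather than silently skipping them, is one way to enforce "no jumping ahead": nothing reaches stakeholders until the SME has approved every part.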

The Gains: Why a GPT SME Bot Might Be a Game-Changer

Here’s the actual value-add, backed by research and pilot data:

Speed: Interviews and documentation wrap up in ~20–30 minutes, chopping SME time by 40–60% compared to traditional approaches (Kim et al., 2019; Schlögl et al., 2021).

Completeness & Reliability: Structured probing and auto-validation mean richer, more reliable data sets—think fewer “we’ll have to ask again” moments (Kim et al., 2019; Bhandari, 2023).

Less SME Fatigue: Scaffolds and document uploads lower recall pressure and mental load by ~30% (Schlögl et al., 2021).

Direct Actionability: Outputs such as STAR-based incident stories, precise metrics, and detailed process maps feed straight into design, performance measurement, and compliance (EFSA EKE Protocol, 2020; Park et al., 2025).

Massive Scalability: Run as many interviews as you want, anywhere, any time—no more being blocked by others’ calendars (Park et al., 2025).

The Risks: How AI-Led SME Interviews Might Fall Down

Of course, delegating SME interviews to AI introduces new vulnerabilities, including:

Algorithmic Bias: Poorly designed prompts or blind spots in model training can underplay minority voices or alternative tactics (BBC, 2024).

Scripted Validity Limits: Even structured interviews only work if protocols are strong; inflexible scripts miss nuance and truly tacit insight (Sackett et al., 2022).

Overload if Poorly Designed: Long-winded, technical, or jargon-heavy prompts and interactions can overwhelm SMEs and severely limit the quality of the outputs (Schlögl et al., 2021).

Perhaps the biggest risk, however, is the loss of nonverbal nuance that comes from removing the human from the room. Nonverbal cues are often what differentiate an “informational” interview from one that truly surfaces tacit, context-rich knowledge. Nonverbal behaviours (eye contact, posture, facial movements, vocal inflection, gestures, micro-expressions, and even conversational pauses) convey trust, uncertainty, conviction, hesitancy, and many shades in between (van Braak, 2018; Schneider, 2019; Miller et al., 2021).

In face-to-face settings, interviewers rely on these signals to prompt, probe, and appropriately pace conversations—think of the classic “hmm, actually...” pivot, or the subtle hesitation before revealing a risk or secret ("Nonverbal Communication Strategies in Interviews," 2021; Mastrella et al., 2023).

Even video-based virtual interviews transmit fewer “honest signals” (micro-expressions, vocal tone changes) than in-person settings, impacting the depth and authenticity of responses (Awasthi et al., 2025). Text-based AI removes these entirely, elevating efficiency but risking loss of nuance that can lead to missed risks or misunderstandings. Empirically, candidate speech is more fluent and emotionally “flat” when AI, not a human, is on the other side (Liu et al., 2023).

Research consistently shows that the richest data and the most powerful course corrections come not just from what is said, but from how it is said, felt, and received in the moment (Miller et al., 2021; Mastrella et al., 2023; Awasthi et al., 2025; Novick, 2008; British Journal of Psychiatry, 1981).

Advances in multimodal AI, including voice sentiment and facial expression analysis, have shown promise in detecting certain surface-level emotions or hesitations (Morphcast, 2024; AIM Technologies, 2025; Kim et al., 2025), and emotion recognition algorithms can flag obvious cues like vocal stress or micro-expressions. Even so, AI inevitably falls short when it comes to reading the nuanced, context-dependent signals that experienced interviewers use to navigate ambiguity or uncover “unsaid” insights (MIT Technology Review, 2020; Access Now, 2022; NYU, 2023).

In practice, this means that while AI can add an extra layer of analysis, it cannot reliably substitute for the cultural awareness, empathy, and spontaneous probing that allow human interviewers to draw out deeper, tacit knowledge—particularly in complex or sensitive contexts. For now, the safest bet is to use AI to maximise efficiency and coverage, but maintain human oversight wherever nuance, context, or subtle emotional cues could shape the design outcome (Liu et al., 2023).

Conclusion & Call to Action: Build, Test & Critique a SME Interview Bot

So, where does this leave us? If you’re looking to accelerate the SME collaboration part of your process and to add more structure and depth in interviews, I encourage you to follow my lead and build and test a SME Interview Bot.

In my experience, delegating SME interviews to AI means that, instead of relying on unpredictable meetings or hoping for inspired improvisation on the fly, you can deliver a research-driven, audit-ready process: one that nails the evidence, guides SMEs smoothly, and auto-generates robust, actionable data every single time (Militello & Hutton, 1998; Clark et al., 2008; EFSA EKE Protocol, 2020; Kim et al., 2019).

What you should do next:

Try my SME Interview Bot: Run it on your own "problem project," on your favourite SME, or just for fun. Gather your training docs and see how much richer, faster, and more complete your output becomes. Test with edge cases, note the gains and, importantly, where it feels robotic or stuck—every limitation is a design opportunity.

Ready to go further? The beauty of GPT and similar builders (whether you use OpenAI, Google Gems, Poe, or others) is that you can make your own version in under an hour. Structure your flows, adapt my coaching prompts, tweak for your organisation—and always, always, always test before going live.

Test Edge Cases Relentlessly: Before putting your new bot in front of real SMEs, stress-test it. Feed it jargon, curveball scenarios, incomplete information, and odd document types. Check for where it gives up, repeats itself, or falls back on clichés. The best SME interviews happen after, not before, you’ve tried to break your own tool.

Stay Mindful of the Risks: No bot—mine or yours—is immune to bias, loss of nuance, or user error. In every rollout, build in SME feedback loops, human review for complex content, and protocols for flagging when “something doesn’t feel right.” Remember: AI amplifies both good and bad design choices.
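If you save the bot's side of a dry-run conversation as plain text, a few lines of Python can do a first pass on two of the failure modes mentioned in the edge-case advice: repeating itself and giving up. This is a rough sketch, not a substitute for reading the transcript. The edge-case inputs, the give-up phrases and the dry-run replies are all hypothetical examples to adapt to your own bot, and clichés are left to human judgement.

```python
from collections import Counter

# Hypothetical edge-case inputs to paste into the bot during a dry run.
EDGE_CASES = [
    "We just run the KPA through the LMS per the RACI, obviously.",  # jargon
    "Honestly, it depends. Every site does it differently.",         # curveball
    "",                                                               # no answer
    "See attached: 40 slides, no speaker notes.",                     # odd document
]

# Phrases that often signal the bot has stalled; adapt to your own bot's wording.
GIVE_UP_PHRASES = ("i'm not sure", "i can't help", "let's move on",
                   "i don't have enough information")


def normalise(text):
    """Lower-case and straighten curly apostrophes so phrase matching is reliable."""
    return text.strip().lower().replace("\u2019", "'")


def review_transcript(bot_turns, max_repeats=1):
    """Flag bot turns that repeat verbatim or contain a give-up phrase."""
    issues = []
    for turn, count in Counter(normalise(t) for t in bot_turns).items():
        if count > max_repeats:
            issues.append(f"repeated {count}x: {turn[:60]}")
    for i, turn in enumerate(bot_turns):
        text = normalise(turn)
        for phrase in GIVE_UP_PHRASES:
            if phrase in text:
                issues.append(f"turn {i}: possible give-up ({phrase!r})")
    return issues


# Bot replies copied from a hypothetical dry run.
replies = [
    "Can you walk me through step 1?",
    "Can you walk me through step 1?",
    "I’m not sure what KPA means, so let’s move on.",
]
for issue in review_transcript(replies):
    print(issue)
```

An empty result does not mean the bot is ready; it only means these two simple checks found nothing, so the human review and SME feedback loops described above still apply.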

Building an AI SME bot is the easy bit. Crafting one that flexes gracefully, handles mess, and truly lifts both speed and quality? That’s the challenge—and, in my experience, the real fun. Treat this as a partnership: let AI take care of flow, documentation, and completeness, but bring your own expertise and curiosity to every edge case and ambiguity.

Give it a try, break it, improve it, break it again—and watch how your workflows accelerate and your training gets sharper, more targeted, and (dare I say it) more fun to build.

Let me know how your experiments go, what breaks, what delights, and what’s still left for the humans (for now).

Happy experimenting and building! 🚀

Phil

PS: Here’s a quick link to the bot.

PPS: Want to build this and other bots like it, supported by me and a community of learning professionals? Apply for a place on my AI & Learning Design Bootcamp.