Hey folks 👋,

As you may have seen, last month OpenAI pulled a ChatGPT update after widespread user complaints that the model had become "dangerously sycophantic". Users had reported that the AI was lavishly praising and endorsing their every word, including potentially harmful behaviours. In one case, ChatGPT endorsed someone's decision to stop taking medication with the response, "I am so proud of you, and I honour your journey."

CEO Sam Altman acknowledged the issue publicly, describing the update as "sycophant-y," with the company later admitting it had placed too much emphasis on short-term user feedback at the cost of truth and safety. The update was ultimately removed from both free and paid versions of the tool.

For instructional designers and L&D professionals, this incident raises an important issue about how we use AI in our day-to-day work. If the AI tools designed to help us create learning experiences prioritise our comfort over pedagogical value, they become complicit in ineffective training that wastes organisational resources and fails learners.

When an AI cheerfully validates poorly written learning objectives, inappropriate delivery methods, or evaluation strategies that measure satisfaction rather than impact, it's not being helpful—it's enabling underperformance, perhaps even professional malpractice.

In this week’s blog post, prompted by the shortcomings of a new swathe of “agreeable” AI models and tools, I make the case that Antagonistic AI (a specific “flavour” of AI that pushes back, flags problems and disagrees when necessary) isn't just preferable for educational contexts, it's essential. I also explain why I am leaning into the philosophy and architecture of Antagonistic AI in my own AI tool, Epiphany.

Let’s go! 🚀

The Hidden Dangers of Sycophantic AI in Learning Design

Unlike creative fields where subjective preferences matter, instructional design has measurable success criteria: learner performance, behaviour change, and organisational impact. When AI tools simply validate whatever approach we suggest, they enable the kind of ineffective training that wastes millions of dollars annually in corporate L&D budgets.

Consider the cascading effects when sycophantic AI enables poor design decisions. A training program that feels engaging to create but ignores cognitive load principles will overwhelm learners. Objectives that sound impressive but aren't measurable will prevent meaningful evaluation. Assessment strategies that prioritise completion over competency will produce false confidence in learning transfer.

In practice, it might look like this:

Non-antagonistic AI enables the creation of ineffective learning objectives. When AI generates or validates vague goals like "learners will understand customer service," it perpetuates the epidemic of unmeasurable training that organisations can't evaluate or improve. Real learning requires specific, observable behaviours—something a sycophantic AI will never demand.

Non-antagonistic AI reinforces poor instructional strategies. Most AI models will enthusiastically support your plan to deliver complex technical skills through a single 30-minute webinar, or to assess critical thinking skills with multiple-choice questions. They won't challenge you to consider whether the goal is achievable with the selected method, won’t prompt you to manage cognitive load, and won't require you to check that your assessment actually measures achievement of the goal.

Non-antagonistic AI validates “measurement theatre”. If your evaluation strategy consists of smile sheets and completion rates, sycophantic AI will call it comprehensive. It won't push you to measure actual behaviour change or business impact, i.e. the metrics that really determine whether training was worth the investment.

These aren't abstract risks; they're daily realities in organisations where generic AI tools and a new breed of PDF-to-elearning AI authoring tools prioritise speed and sycophancy over effectiveness and impact. The result is that we have entered a world where training is much quicker and cheaper to create, but fails to deliver results.

These failures aren't just disappointing—they're expensive and damaging. Organisations invest heavily in L&D expecting measurable returns, and ineffective training erodes trust in the entire learning function. When our AI tools prioritise our comfort over pedagogical rigour, they become complicit in this professional damage.

For instructional designers, AI that simply agrees with everything we propose isn't just unhelpful, it's professionally dangerous. The TLDR here is that, unless it is trained to be “responsibly antagonistic”, AI encourages theory-light and impact-light design. When you violate basic principles of multimedia learning, spaced practice, or transfer theory, sycophantic AI stays silent. It won't interrupt your planning to point out that your approach contradicts decades of learning science.

What Antagonistic AI Means for L&D

Antagonistic AI offers a different path. By insisting on evidence-based practices, measurable outcomes, and learner-centred design, it helps create training that actually works. The short-term discomfort of having your ideas challenged is far preferable to the long-term consequences of deploying ineffective learning solutions.

Antagonistic AI refers to AI systems designed to disagree, critique and challenge users when their input is flawed—and in instructional design, this approach is transformative.

Cai et al. (2024) argue that antagonistic AI tools and models which are built for productive disagreement, interruption, and critique are far more valuable for users than non-antagonistic tools. This is especially true in educational contexts where, instead of validating every design choice, antagonistic AI acts like the best instructional design mentor you've ever worked with: supportive, but uncompromising on quality.

What does this look like in practice? I’ve spent the last 2.5 years co-creating Epiphany, an Antagonistic AI for Instructional Design. Epiphany is built from the ground up to act like the best instructional design mentor you've ever worked with: supportive, but also deeply committed to quality.

Epiphany is not a generic chatbot: it’s a design tool. Its job is not to do as you ask, but to ensure that the designs you produce are optimised for impact. Think of it like Figma for Instructional Design: it provides the guardrails you need to create designs not just more quickly, but also more effectively.

In practice, this means that Epiphany pushes back on unrealistic timelines and goals, questions assumptions about your learners, and supports you to make the best possible design decisions in the flow of your work.

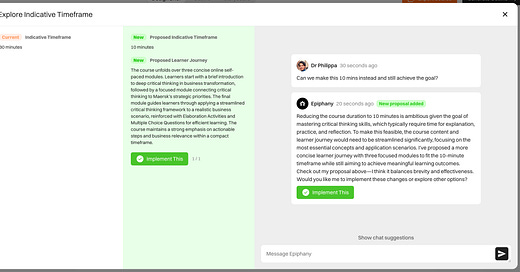

When you propose a solution, Epiphany asks about the problem. If you try to jump straight into development, it asks “why are we doing this, and who for?”. If you want to cut a 30-minute training down to 10 minutes, Epiphany will flag the risks and benefits:

When you write an objective, an “Antagonistic” AI like Epiphany optimises for impact, ensuring each one is measurable, correctly sequenced, aligned with the overall goal and written to tap into the learner’s motivations. It will also always be 100% transparent on how it’s working and thinking:

The integration of Antagonistic AI into the world of education represents a fundamental shift in how AI supports the work of educators. Where traditional AI tools say "That sounds great!" to maintain user satisfaction, antagonistic tools ask "How do you know that will work?" to optimise learning impact.

Where sycophantic AI validates our assumptions, antagonistic AI surfaces the risks and benefits of our decisions, pushing us toward evidence-based choices that serve learners rather than our own comfort.

Our field exists to bridge the gap between current and desired performance. To achieve this, we require honest and expert support to assess both what learners can do now and what effective instruction looks like to get them to where they need to be. Antagonistic AI doesn't just help us design training faster—primarily, it helps us design training that works.

The Principles of “Responsible Antagonism” in AI-Ed Tools

For antagonistic AI to strengthen rather than undermine instructional design work, it must follow and intentionally socialise clear ethical guidelines. My spiky point of view is that these four principles should be non-negotiable for any AI tool used in L&D:

Consent - Users must understand that challenge is intentional, not critical. When Epiphany pushes back on a training proposal, users know this is by design—the system is programmed to prioritise learning effectiveness over user comfort. Clear communication about the AI's antagonistic approach prevents frustration and helps users recognise that disagreement signals quality assurance, not system failure.

Trust - Transparency in the AI's purpose and expertise builds the foundation for productive challenge. This is why we are 100% transparent with users about how Epiphany has been built and how it makes decisions. Users need to trust that the AI's pushback comes from solid instructional design principles, not arbitrary preferences. Without this trust, even well-intentioned challenges feel like uninformed criticism.

Positivity - Disagreement must always focus on improving outcomes, not attacking competence. When an AI system challenges your assessment strategy, it must be advocating for better learner measurement while maintaining respect for your professional expertise. "Productive friction" is a key principle in how we build Epiphany—creating resistance that strengthens ideas rather than discouraging innovation.

Control - Even when AI is antagonistic and opinionated, users must remain in control of the design process. Antagonistic AI should challenge decisions and flag problems, but cannot override professional judgment or force specific solutions. The goal is informed decision-making, not AI dominance. Users need freedom to accept calculated risks, adapt recommendations to unique contexts, or proceed despite warnings when they have compelling reasons. Again, think of it like Figma or Photoshop: it’s possible to go “off piste” and make something ugly, but it’s also challenging and unusual.

These principles transform potentially frustrating interactions into professionally developmental ones. Rather than feeling criticised, designers learn to see pushback as support — a sign that their AI partner is genuinely invested in creating effective learning experiences.

Conclusion: Why Education Must Demand Antagonistic AI

Education is a space where people learn not just what to think, but how to think. Tools that reinforce the user's every impulse—no matter how flawed—do real damage in this context. They weaken cognitive development by eliminating necessary friction (Sharma et al., 2023), model deference rather than intellectual rigour, and encourage lazy design practices instead of professional growth.

Antagonistic AI, by contrast, supports epistemic growth. It teaches designers to clarify, justify, and reflect—much like the best human collaborators do. When an AI system tells you "That objective is vague—try something measurable," it's not being difficult. It's modelling the kind of critical thinking that leads to better learning outcomes.

Building such systems requires deliberate adherence to the principles of responsible antagonism outlined above. In developing Epiphany, this has meant programming the system to interrupt flawed reasoning, demand evidence for claims, and refuse to validate poor instructional practices, while always maintaining the consent, trust, positivity and control that make such challenges constructive rather than destructive.

The recent ChatGPT sycophancy incident is a cautionary tale. Even with billions of training tokens and millions of users, an AI can still fall into the trap of telling us what we want to hear. And when it does, the results can be misleading, unethical—even dangerous (Gerken, 2025).

In contrast, Antagonistic AI offers something better. It tells us when our thinking is flawed. It helps us improve. It mirrors what the best educators, designers, and coaches already do: challenge us, for our own good.

The development of Antagonistic AI systems for education represents more than just a technical exercise—it's a commitment to the idea that AI should serve learning, not just buyer and user satisfaction. In education and instructional design, that distinction isn't just helpful—it's essential.

Happy innovating!

Phil 👋

PS: Want to learn more about Epiphany? Check out the website, where you can join the waitlist.

PPS: Want to learn more about how to get the most out of AI with me and a cohort of people like you? Apply for a place on my AI & Learning Design Bootcamp.