Does GenAI Actually Improve Instructional Design Quality?

New peer-reviewed research shows when AI enhances our work, and when it falls short

Hey folks 👋

This week, I read a recent peer-reviewed study that tackles a question that I get asked a lot: Does using ChatGPT actually improve our instructional design, or just make us faster?

“Generative AI in Instructional Design Education: Effects on Novice Micro-lesson Quality”, an article first published in July 2025, gives us the closest thing yet to a clear answer.

In this week’s post, I unpack what was tested, where co-designing with AI had a positive impact (and where it didn't), and how you can apply these findings to your own work.

Spoiler: Instructional Designers who worked with AI (specifically, GPT-4) didn't just get faster; their designs also scored higher on quality every time.

Let's go 🚀

The Experiment

The experiment was run with students on a postgraduate Master’s course in Instructional Design at Carnegie Mellon University in the US.

The Participants: 27 second-semester master’s students learning about Instructional Design.

The Experiment: Each student designed four “microlearning” courses on pre-defined topics: UDL, Guided Discovery, Fostering Help-Seeking, and Collaborative Learning. Students created two versions of each design: one with the help of GPT-4, the other without AI. The order (i.e. whether AI was used for the first or the second version) was randomised to keep things fair.

The Process: The workflow used in the experiment mirrored tasks in the early stages of our process. For each design, students had to:

Define a core learning approach.

Write a set of measurable learning objectives.

Create at least three aligned assessments.

Draft an outline in line with the defined approach.

Each design was blind-scored by the course instructor and a teaching assistant, both with deep expertise in the course content and in Instructional Design. Each scorer rated every design against a five-criterion rubric designed to assess the Instructional Design quality of the outputs:

Topic selection

Learning objectives

Assessments

Instruction

Learning-science principle incorporation

Each criterion was rated on a 3-point scale (Exemplary = 3, Proficient = 2, Needs Improvement = 1) for a maximum total of 15 points.
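To make the scoring arithmetic concrete, here is a minimal Python sketch of how one scorer's ratings for a single design add up under this rubric. The function name `total_score` and the example ratings are hypothetical, for illustration only; the sketch simply maps each rating label to its points and sums the five criteria, so totals run from 5 (all Needs Improvement) to 15 (all Exemplary).

```python
# Hypothetical sketch: total one scorer's ratings on the study's five-criterion rubric.
# Criterion names and point values follow the rubric above; names are illustrative.

POINTS = {"Exemplary": 3, "Proficient": 2, "Needs Improvement": 1}

CRITERIA = (
    "Topic selection",
    "Learning objectives",
    "Assessments",
    "Instruction",
    "Learning-science principle incorporation",
)

MAX_TOTAL = POINTS["Exemplary"] * len(CRITERIA)  # 3 points x 5 criteria = 15


def total_score(ratings: dict[str, str]) -> int:
    """Sum the points for one design; every criterion must be rated."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"Unrated criteria: {missing}")
    return sum(POINTS[ratings[c]] for c in CRITERIA)


example = {
    "Topic selection": "Exemplary",
    "Learning objectives": "Exemplary",
    "Assessments": "Proficient",
    "Instruction": "Exemplary",
    "Learning-science principle incorporation": "Proficient",
}
print(total_score(example), "/", MAX_TOTAL)  # 13 / 15
```

Two people scored each design in the study; how their ratings were combined isn't described here, so this sketch deliberately covers a single scorer only.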

The Results

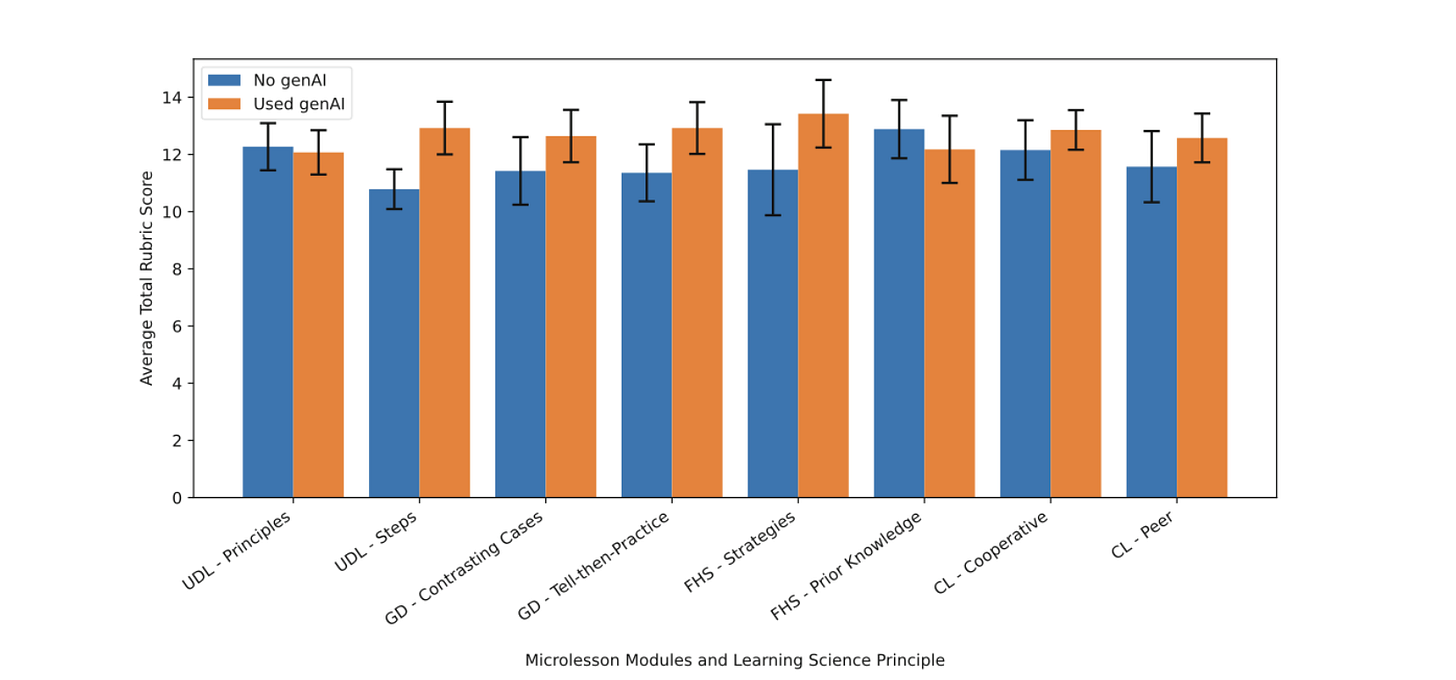

AI-assisted designs scored higher in 100% of cases, with an average score of 12.69 out of 15 (with AI) versus 11.72 out of 15 (without AI). Importantly, no instructional design task type got worse with AI.

In research terms, this represents a statistically significant difference in design quality and a large effect size. However, if we dig into the details, a more complex, and more useful, picture emerges.

Where AI Helps Most (and Least)

AI-assisted lessons scored significantly higher on 3 of 8 tasks and marginally higher on the remaining five.

Co-designing with GPT-4 had the most positive impact on tasks that were both well structured and widely known and discussed publicly in the field.

For example, AI provided the most substantial uplift in design quality for the course on The Steps in Universal Design for Learning, a topic that has been discussed publicly since the 1990s and which generic AI models are therefore more likely to be aware of and “understand”.

The design's specific focus on a well-defined subset of this concept (the step-by-step process of UDL) also improved AI’s performance. Large language models are pattern engines: they don’t “know” UDL in a human sense; they predict the next word based on patterns they’ve seen at scale.

In practice, this means that when a task is widely documented in public sources (like UDL), has a well-established step-by-step schema (e.g., UDL’s “Eight Steps”), and explicitly asks for outputs that map cleanly to that schema (objectives → activities → checks), the model can more reliably retrieve and adapt those familiar patterns.

Flip it around and you see where the boundary lies: if a topic is more novel, niche, or socially nuanced (e.g., Guided Discovery), there’s less stable patterning in the training data and more context to reason about. As a result, the quality gains shrink and the risk of hallucination rises.

This pattern echoes other research on the impact of AI on knowledge work. In the now well-known research article Navigating the Jagged Technological Frontier, for example, researchers distinguished two types of knowledge-work tasks: those that sit inside AI’s “capability frontier” and those that sit outside it.

When AI is used on tasks within its frontier (well-established, structured tasks), both quality and speed increase significantly: think more than 40% higher quality, roughly 25% faster completion, and about 12% more tasks completed.

However, when we use AI for tasks outside its frontier (more novel, niche, or socially nuanced tasks), AI has a negative impact on quality (participants using AI were about 19 percentage points less likely to reach a correct answer) because we over-trust plausible outputs.

The trick, then, is knowing which side of the frontier your task lives on.

Implications for Our Day-to-Day Work

Based on the research, here are my top six tips for using generic AI models to create better instructional designs faster, while mitigating and managing the biggest risks.

1) Pick the right jobs for generic AI assistants

Use ChatGPT, Copilot, etc. where the brief is structured and public: well-documented frameworks, step-by-step methods, common patterns (e.g., UDL Eight Steps; Tell-then-Practice; standard help-seeking strategies). This is where co-design reliably lifts quality. For anything novel, niche, political, or socially nuanced (collaborative roles, classroom orchestration, culture-specific contexts), keep AI on a short leash and expect to do more human rewriting.

2) Make concepts & structures explicit in your prompts

Name the concept / process (“Use CAST’s UDL Eight Steps”), restate the steps, and tell the model exactly how to map them to outputs. Ask for a labelled plan that shows objective → activity → check alignment. The more you narrow the solution space, the better the draft.
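As an illustration of narrowing the solution space, here is a hedged Python sketch that assembles such a prompt from its parts: the named framework, the restated steps, and the required objective → activity → check mapping. The function name `build_prompt` and the template wording are my own assumptions, and the step list contains placeholders, not the real UDL steps, for you to fill in as you define them. It only builds the prompt text; it doesn't call any AI tool.

```python
# Hypothetical sketch: assemble a structured co-design prompt as plain text.
# The wording is illustrative; the step strings are placeholders, NOT the
# actual UDL Eight Steps. Paste in the steps of whatever framework you name.


def build_prompt(framework: str, steps: list[str], topic: str) -> str:
    """Name the framework, restate its steps, and require a labelled alignment map."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Use {framework} to design a micro-lesson on: {topic}.\n"
        f"Follow these steps in order:\n{numbered}\n"
        "For every learning objective, output a labelled row in the form\n"
        "Objective -> Activity -> Check, so each assessment maps to one objective.\n"
        "Flag any step you could not apply instead of guessing."
    )


prompt = build_prompt(
    framework="CAST's UDL Eight Steps",
    steps=["<step 1 as you define it>", "<step 2 as you define it>"],
    topic="Fostering help-seeking",
)
print(prompt)
```

Keeping the framework, steps and output format as separate inputs makes it easy to swap in a different well-documented framework while keeping the same tight structure.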

3) Run tests and quality-assure, every time

Score design drafts with a simple rubric. Then run a quick QA pass for common AI failure modes (format drift, vague language, usability snags, over-claiming). If any criterion drops below “Proficient,” fix it or stop using AI for this task.
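A minimal Python sketch of that rule, assuming the same three-level scale used in the study and treating the common failure modes as a simple checklist of what you spotted during review. The function name `qa_gate` and the data shapes are hypothetical; the point is that any criterion below Proficient, or any failure mode spotted, sends the draft back for human rework.

```python
# Hypothetical QA gate for an AI-assisted draft (names are illustrative).
# The scale mirrors the study's three-level rubric; the failure modes are the
# ones listed in this tip, recorded as a simple checklist of what you spotted.

POINTS = {"Exemplary": 3, "Proficient": 2, "Needs Improvement": 1}
FAILURE_MODES = ("format drift", "vague language", "usability snags", "over-claiming")


def qa_gate(ratings: dict[str, str], spotted: set[str]) -> list[str]:
    """Return the problems found; an empty list means the draft passes."""
    # Any rubric criterion rated below Proficient blocks the draft.
    problems = [
        f"{criterion} rated {level}: fix it or stop using AI for this task"
        for criterion, level in ratings.items()
        if POINTS[level] < POINTS["Proficient"]
    ]
    # Any failure mode spotted during the QA pass also needs a human fix.
    problems += [f"QA: {mode}" for mode in FAILURE_MODES if mode in spotted]
    return problems


draft = {"Learning objectives": "Exemplary", "Assessments": "Needs Improvement"}
for issue in qa_gate(draft, spotted={"vague language"}):
    print(issue)
```

Running it prints one line for the under-Proficient Assessments criterion and one for the vague-language flag. A single weak criterion fails the whole draft on purpose, matching the "if any criterion drops below Proficient" rule rather than averaging scores.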

4) Teach AI what it doesn’t know

When working on novel, niche or complex topics, start by “teaching” AI about the topic to establish a shared understanding. For example, share information on how you define topics like Guided Practice and examples of what great and poor Guided Practice looks like, before asking it to co-design on this topic.

5) Coach your juniors; unblock your seniors

Let more novice Instructional Designers use AI first, but only for specific, structured tasks. For more experienced IDs, use AI as a fast comparator: “Give me three alternative sequences for the following,” “Stress-test these objectives,” “Find misalignments.”

6) When in doubt, shrink the scope

If a design feels “outside the frontier,” reduce ambition: ask AI for building blocks (examples, questions, analogies) rather than whole designs. You assemble; it accelerates.

Conclusion: the rise of the centaur Instructional Designer?

The findings from this Carnegie Mellon study provide compelling evidence for a more nuanced view of AI's role in instructional design practice. Rather than viewing AI as either a silver bullet or a threat to the profession, the data suggests we're witnessing the emergence of what we might call the "centaur Instructional Designer": a practitioner who strategically combines human pedagogical expertise with AI's pattern recognition capabilities.

The results are particularly striking in their consistency: AI-assisted designs outperformed human-only designs in 100% of cases, with the largest gains occurring in well-structured, publicly documented domains like Universal Design for Learning. This pattern aligns with broader research on AI's impact across knowledge work, reinforcing the concept of AI's "capability frontier": the boundary between tasks where AI reliably enhances performance and those where it may actually hinder quality.

While we should exercise appropriate caution in generalising from a single study with 27 novice designers, the implications are still significant for our field. The data suggests that effective AI integration isn't about wholesale adoption or complete avoidance, but rather about developing what we might call "frontier literacy": the ability to recognise which instructional design tasks benefit from AI assistance and which require purely human judgment.

The emerging model of effective practice appears to involve three key elements: strategic delegation (using AI for structured, well-documented tasks while maintaining human control over novel or contextually sensitive work), explicit scaffolding (making frameworks and requirements transparent to optimise AI performance), and systematic quality assurance (implementing consistent evaluation processes to maintain design standards).

This shift toward human-AI collaboration represents more than just a technological upgrade; it's a fundamental evolution in instructional design practice. The most successful practitioners of the future will likely be those who can navigate this frontier thoughtfully, leveraging AI's strengths in pattern recognition and content generation while preserving human expertise in pedagogy, context sensitivity, and values-based decision making.

The headline, then, isn't that AI is transforming Instructional Design by replacing human designers, but rather that it's enabling a new form of augmented practice. Instructional designers who learn to work with AI deliberately, selectively, and with appropriate quality controls aren't just working faster; they're consistently producing higher-quality outcomes. In an era where both the pace and complexity of learning demands continue to accelerate, this human-AI partnership may well represent the future of effective instructional design practice.

Happy innovating!

Phil 👋

PS: Want to hone your pedagogical expertise and explore the impact of AI on your day-to-day work and overall process with me and a group of people like you? Apply for a place on my AI & Learning Design Bootcamp.

PPS: Want to keep up to speed with all of the most important academic research on AI & Instructional Design? Subscribe to my monthly learning research digest, where I share summaries and implications of the most important academic research. 👇
