Are ChatGPT, Claude & NotebookLM *Really* Disrupting Education?

Evaluating Gen AI's *real* impact on human learning

Hey friends! 👋

This week, I’ve been doing some research for a new academic article with the working title, “Evaluating the Use of AI Tools for Human Learning: Opportunities & Limitations”.

As AI tools like ChatGPT 4o, Claude 3.5 & NotebookLM are being increasingly positioned as “learning apps” and “education sensations”, my research asks: how much and how well do popular AI tools really support human learning and, in the process, disrupt education?

In this week’s post, I’ll share what I’ve tested and learned so far.

Let's go! 🚀

Methodology & Research Questions

I started by developing a simple method to rapidly analyse how well ChatGPT 4o, Claude 3.5 & NotebookLM handle and communicate educational content. I created a simple evaluation rubric to explore five key research questions:

1. Inclusion of Information

How effectively do AI tools summarise and explain the core concepts, themes, methods etc. in the input materials?

2. Exclusion of Information

What, if anything, do AI tools exclude in the process of summarisation and explanation?

3. [De]Emphasis of Information

What sorts of information and ideas, if any, do the AI tools emphasise and de-emphasise?

4. Structure & Flow

How effectively do AI tools capture and communicate the overall structure and flow of information?

5. Tone & Style

How effectively do AI tools capture and communicate the tone and style of the input material, and how does this impact the meaning of that material?

To assess the AI tools’ outputs in detail, I used one of my own research articles as the input material, which I fed into what are widely considered to be the three big AI tools for learning: ChatGPT 4o, Claude 3.5 and NotebookLM.

I prompted each tool in turn to read the article carefully and summarise it, covering all key concepts, ideas etc. so that I would get a thorough understanding of the article and the research.

Results

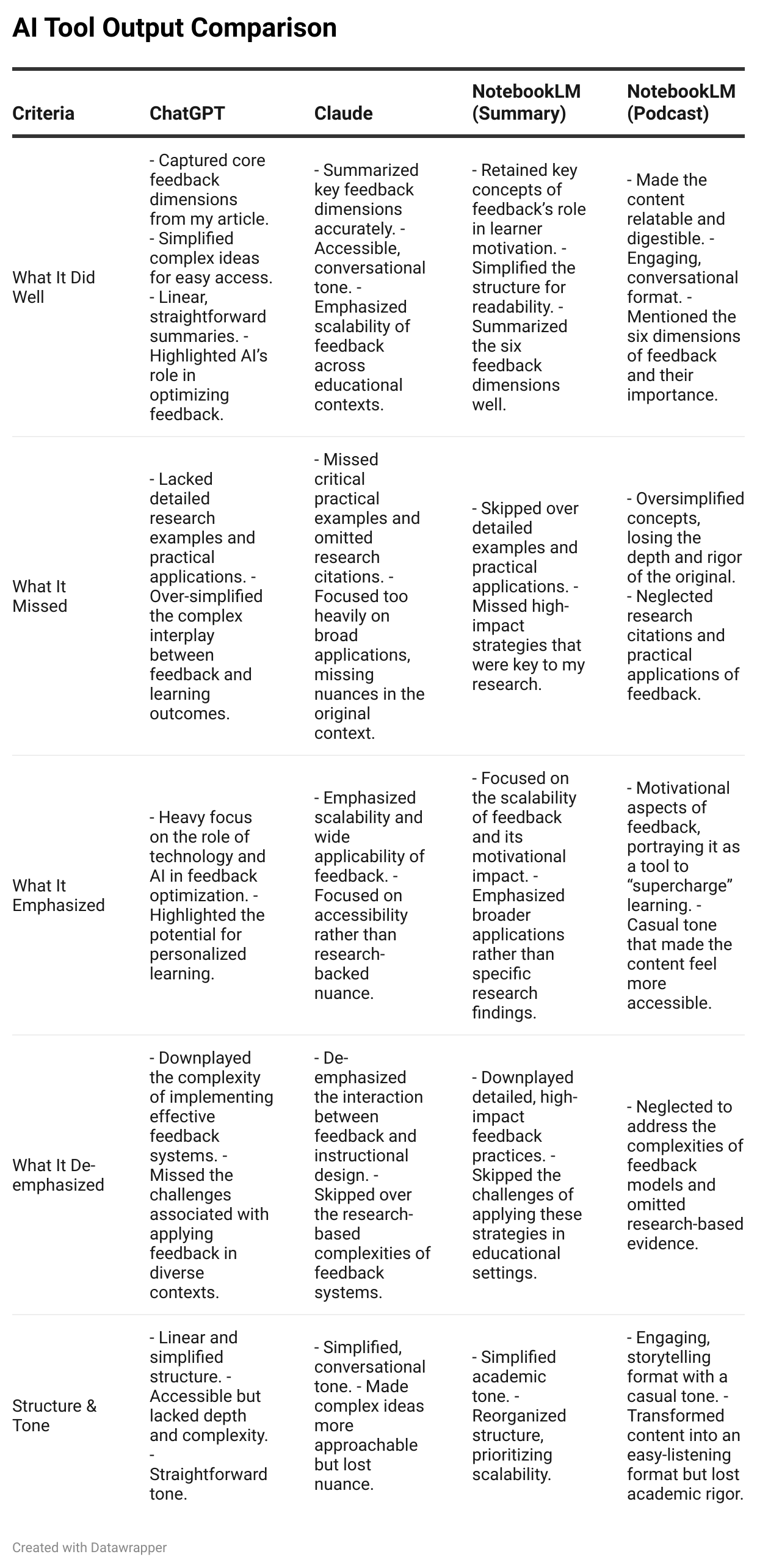

Here’s a summary table showing my findings so far:

One thing that we’re reminded of here is that, while generative AI tools undoubtedly enhance access to information, they also actively “intervene” in the information-sharing process, shaping the type and depth of information that we receive, as well as (thanks to changes in format and tone) its meaning.

Here are a few specific observations that I’ve made:

ChatGPT 4o

ChatGPT oversimplifies and sometimes omits critical details—including specific research studies, frameworks and examples. Be sure to supplement it with deeper reading for a more comprehensive understanding.

Claude 3.5

Like ChatGPT, Claude misses important practical examples, frameworks and certain research details. Use it as a starting point, but follow up with targeted probing and more detailed material for a fuller picture.

NotebookLM Summary Feature

NotebookLM’s summary feature tends to gloss over practical applications, frameworks and important examples. It’s helpful for a rapid, high-level review but must be paired with a thorough reading of the original material.

NotebookLM Podcast Feature

In simplifying the content for the podcast format, much of the academic depth and nuance is lost. The change of style and tone makes the content more accessible, but at the same time significantly reduces detail and “flattens” complexity. If you want anything more than a very high-level “flavour” of information, don’t rely on this feature.

Reflections So Far

While popular AI tools are helpful for summarising and simplifying information, when we start to dig into the detail of AI’s outputs we’re reminded that these tools are not objective; they actively “intervene” and shape the information that we consume in ways which could be argued to have a problematic impact on “learning”.

Another thing is also clear: tools like ChatGPT 4o, Claude 3.5 & NotebookLM are not yet comprehensive “learning tools” or “education apps”. To truly support human learning and deliver effective education, AI tools need to do more than provide access to information: they need to support learners intentionally through carefully selected and sequenced pedagogical stages.

In practice, this looks like building AI tools which go beyond information summarisation and align with established and trusted pedagogical research on how humans learn.

To make this more concrete, here are three examples of what a more “pedagogy first” approach to AI tooling might look like in practice:

1. Spaced Repetition

As documented by Cepeda et al. (2006) and others, spaced repetition is a proven strategy for enabling long-term information retention and understanding by revisiting information at deliberate and carefully defined intervals.

An AI tool for substantive learning might use adaptive algorithms to implement spaced repetition, prompting learners to revisit material at optimal intervals, greatly enhancing retention and improving long-term learning outcomes.
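To give a feel for what this could look like under the hood, here is a minimal Python sketch of an expanding-interval scheduler. It is an illustration only: the `Card` class, the `review` function and the interval values in `INTERVALS_DAYS` are all hypothetical names and numbers chosen for the example, not taken from Cepeda et al. (2006) or from any existing tool, and a genuinely adaptive system would tune the intervals per learner.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical expanding intervals (in days). Illustrative values only,
# not drawn from the research literature or from any specific product.
INTERVALS_DAYS = [1, 3, 7, 14, 30]


@dataclass
class Card:
    prompt: str
    level: int = 0        # index into INTERVALS_DAYS
    due: date = date.min  # date of the next scheduled review


def review(card: Card, recalled: bool, today: date) -> Card:
    """Update a card after a review and schedule its next one.

    A successful recall moves the card up one level (a longer gap);
    a miss sends it back to the shortest gap.
    """
    if recalled:
        card.level = min(card.level + 1, len(INTERVALS_DAYS) - 1)
    else:
        card.level = 0
    card.due = today + timedelta(days=INTERVALS_DAYS[card.level])
    return card


card = Card(prompt="What is spaced repetition?")
review(card, recalled=True, today=date(2024, 1, 1))
print(card.level, card.due)
```

The design choice here is deliberately simple: each correct answer stretches the gap before the next review, and each miss shrinks it again, which is the basic shape of spacing that a more sophisticated tool would refine.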

2. Deliberate Recall

Research by Karpicke & Roediger (2008) has shown that active and deliberate recall—i.e. retrieving information from memory—dramatically improves learning retention compared to passive review. AI tools, however, primarily focus on delivering information rather than encouraging active engagement.

Rather than focusing only on delivering information, AI tools should be built to require learners to retrieve key concepts from memory at carefully sequenced intervals, promoting deeper cognitive processing and stronger retention.
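As a rough illustration of "retrieve first, reveal second", the Python sketch below checks a learner's free-text answer against a list of key concepts before any summary is shown. The function name `score_recall` and the keyword-matching approach are assumptions made for this example; simple substring matching is a crude stand-in for the kind of assessment a real tool would need.

```python
def score_recall(answer: str, key_concepts: list[str]) -> dict[str, list[str]]:
    """Split key concepts into those the learner's answer mentions and those it misses.

    Matching is a case-insensitive substring check, which is an
    illustrative simplification rather than a robust assessment.
    """
    text = answer.lower()
    recalled = [c for c in key_concepts if c.lower() in text]
    missed = [c for c in key_concepts if c.lower() not in text]
    return {"recalled": recalled, "missed": missed}


result = score_recall(
    "Spaced repetition helps with long-term retention.",
    ["spaced repetition", "retrieval practice"],
)
print(result)
```

A tool built this way could hold back its summary until the learner has attempted an answer, then use the `missed` list to decide what to revisit.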

3. Complexity & Friction

Simplification and summarisation are useful for “getting started” and increasing the accessibility of information, but research shows that learning requires friction and increasing levels of complexity and challenge. When material is simplified, learners miss out both on critical nuances and on the motivation of challenge, both of which are essential for substantive learning (Mayer, 2011).

AI tools should offer layered explanations, allowing learners to start with basic overviews before delving deeper into more complex concepts and applications. By managing and increasing complexity and challenge, AI tools could better mirror real-world learning, where understanding often unfolds in stages and requires deeper exploration over time.

Material that is too easy can negatively impact motivation & overload cognitive processes that are critical to learning.

(Mayer, 2011)

Closing Thoughts: Redefining the “Learning” Process

It’s clear that AI tools like ChatGPT, Claude, and NotebookLM are incredibly valuable for making complex ideas more accessible; they excel in summarisation and simplification, which opens up access to knowledge and helps learners take the first step in their learning journey. However, these tools are not learning tools in the full sense of the term—at least not yet.

By labelling tools like ChatGPT 4o, Claude 3.5 & NotebookLM as “learning tools” we perpetuate the common misconception that “learning” is a process of disseminating and absorbing information. In reality, the process of learning is a deeply complex cognitive, social, emotional and psychological one, which exists over time and space and which must be designed and delivered with intention.

The TLDR here is that, as useful as popular AI tools are for learners, as things stand they only enable us to take the very first steps on what is a long and complex journey of learning.

AI tools like ChatGPT 4o, Claude 3.5 & NotebookLM can help to give us access to information but (for now at least) the real work of learning remains in our - the humans’ - hands.

Happy experimenting!

Phil 👋

P.S. Want to dive deeper into learning science and pedagogy-first AI? Join me on an upcoming cohort of my AI-Learning Design Bootcamp!