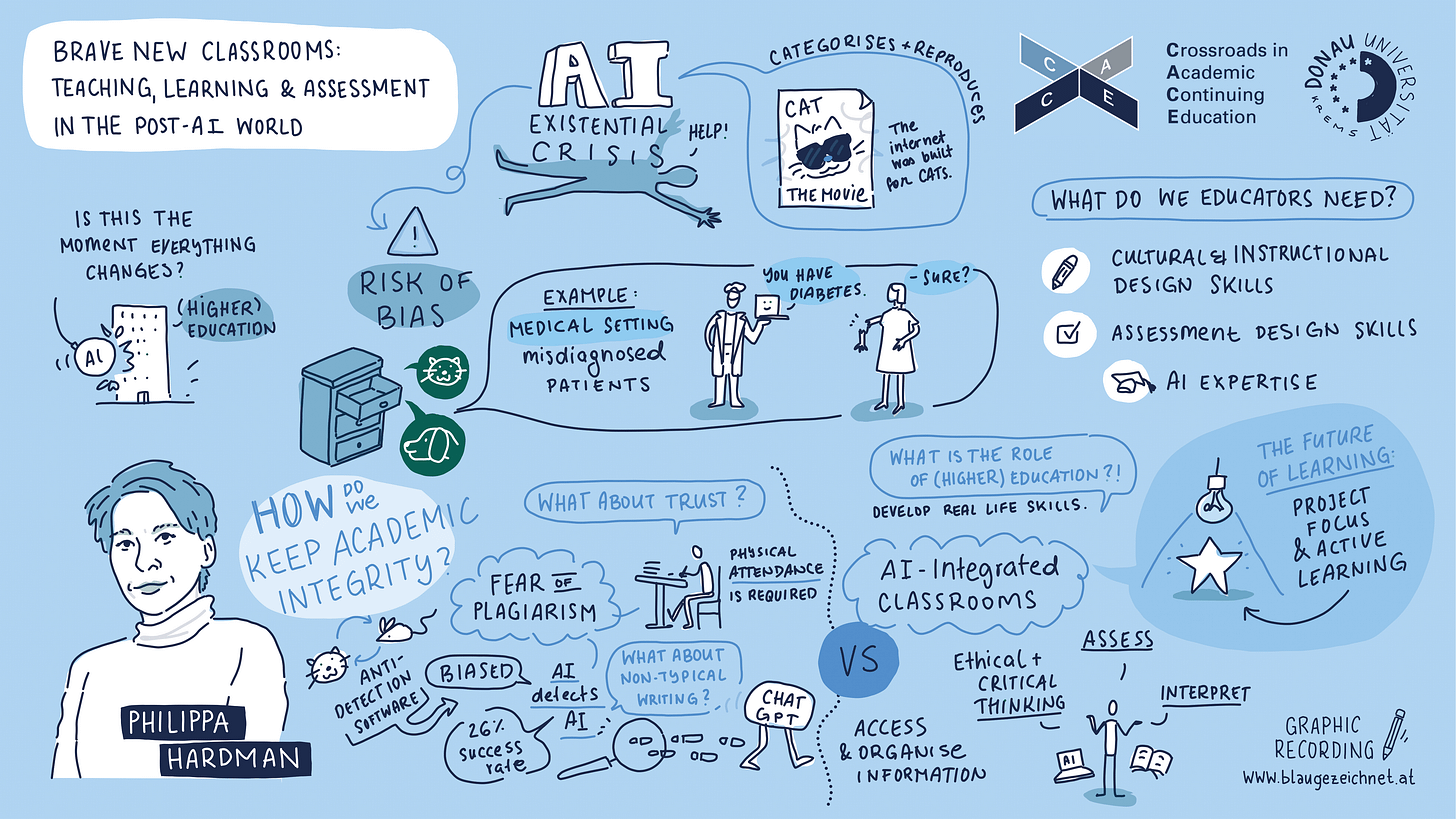

The debate around AI in education often circles back to concerns about academic integrity. If tools like ChatGPT can write an essay better than the average student, where does this leave educators, education institutions and the concept of academic integrity?

Academic integrity: “The expectation that teachers, students, researchers and all members of the academic community act with: honesty, trust, fairness, respect and responsibility.”

This is a question that I have explored a lot with colleagues in universities across the world in the last few months. In every case, I have shared a spiky point of view - it goes like this:

AI in education is primarily seen as an existential threat to academic integrity. If AI can write an essay better than the average student, we are doomed.

Many institutions have responded by increasing levels of student monitoring: think in-person essay writing and/or monitoring students at scale through the use of AI detection technologies like GPTZero and Turnitin.

But, by increasing monitoring and by investing in AI detection technologies which have proved to be both discriminatory and ineffective, we actively erode and undermine - rather than build and reinforce - trust and academic integrity.

So, what’s the alternative?

AI: a Tool for Academic Integrity?

If we embrace rather than monitor the use of AI in education, can we enhance (rather than erode) academic integrity? I think the answer is yes.

Here are a couple of examples:

What if…. we shift the focus of education from knowledge recall to the development of critical thinking, analytical skills, and problem-solving abilities?

In this scenario, AI becomes a tool which is used in exactly the same way as textbooks or research papers: it’s used by students to gather information, inspire creativity, and organise thoughts. The role of educators shifts to supporting and assessing students in their ability to interpret, critique, and apply the knowledge gained, rather than just memorise and regurgitate facts.

What if…. instead of traditional exams that often encourage rote learning, we move towards continuous, project-based assessments?

In this scenario, continuous behavioural assessments would evaluate a student's ability to research, synthesise information, and present well-reasoned arguments. This approach not only provides a more inclusive and holistic view of a student's skills and knowledge but also arguably aligns more closely with the challenges they will face in the real world.

The question I’d like us all to ask is: does the integration of AI into education present a crisis for academic integrity, or an opportunity to enhance it?

AI requires a shift from traditional, memorisation-focused teaching, learning and assessment methods to a model that emphasises critical thinking, analytical skills, and problem-solving. That presents an immediate challenge to academic integrity as we know it, but also an opportunity both to strengthen academic integrity and to improve student outcomes.

For example:

Promoting Honest and Responsible Use of Information: By using AI as just another resource, akin to textbooks or research papers, students are encouraged to engage with information honestly and responsibly. AI can aid in gathering information and organising thoughts, but the emphasis is on the student's ability to critically interpret, analyse, and apply this information. This fosters an environment where students learn to use AI ethically, enhancing the values of honesty and responsibility.

Building Trust Through Inclusive Assessment Methods: Shifting from traditional exams to continuous, project-based assessments helps create a more inclusive and fair evaluation system. This approach recognises diverse learning styles and backgrounds, thus maintaining fairness and equity in the educational process. By moving away from high-pressure, one-size-fits-all exams, educators show trust in their students' abilities to demonstrate knowledge and skills in various ways, reinforcing the principle of trust.

Enhancing Responsibility in Learning: Encouraging, rather than repressing, the use of AI in education instills a sense of responsibility in students to use technology ethically and effectively. It prepares them for a future where AI is an integral part of the professional landscape, teaching them to be responsible users and creators of AI-generated content.

Closing Reflections

Rather than defaulting to the view that the use of AI constitutes plagiarism, and responding by increasing student monitoring, we must have an open and honest conversation about the real impact of AI on academic integrity.

As educators and academic institutions, will we opt to protect academic integrity as we know it and position AI as an existential threat to it?

Or will we shift into a period of “post-plagiarism” and embrace AI as an unprecedented opportunity to deepen our commitment to honesty, trust, fairness, respect and responsibility?

Only time will tell.

Happy innovating!

Phil 👋

P.S. If you want some hands-on experience with AI in a safe and supported environment, join me and other educators and learning professionals on an upcoming cohort of my AI-Powered Learning Science Bootcamp.