80% of AI Models Misread the Docs You Give to Them

Aka, the hidden source-doc crisis in L&D and what to do about it

Hey folks!

This week, I’ve been deep-diving one of the most common AI use cases among L&D folks: doc analysis, summarisation & comparison.

Imagine a scenario where you’ve just been handed a 100-page update to the company’s global code of conduct. The doc is a labyrinth of procedural steps, legal definitions and cross-references. Your task is to compare it against your existing compliance training and identify gaps which will be filled with a new version of the training.

In a high-pressure world of shrinking budgets and (compounded by AI) increasing demand for "high-speed design," the temptation for those of us in L&D to turn to AI is high.

The promise of AI for the pressured L&D professional is intoxicating: a world where you can upload both documents, type a simple command like "Find what's missing," and receive a perfect, actionable gap analysis in minutes: in some ways it’s the ultimate efficiency hack we've all been waiting for.

But what if AI’s promise is built on a set dangerous assumptions?

As a team obsessed with using AI to increase both the velocity and the quality of instructional design work, at Epiphany we believe in testing rigorously - rather than blindly trusting - AI’s performance.

So, when we built our doc upload feature, we designed a series of scientifically sound experiments to test its core capability — including its ability to “read” and summarise documents effectively.

The results were not just surprising — they were a profound wake-up call for the many instructional designers using AI to create learning experiences.

In this week’s blog post I share our findings and provide tips on how to mitigate the risks of risky AI behaviours.

Let’s dive in! 🚀

The Experiment: A Simple “Null Test”

A core principle of the scientific method is to control your variables — this is what enables you to isolate and identify the causes of what you're studying.

To truly test AI's ability to find patterns (e.g. comparing and contrasting the content of two documents) the most robust test is to see if it can correctly identify differences in a case where there are, in fact, no differences at all. This is known as a "null test” — and this is how we started to test the document analysis ability of various AI models.

Our hypothesis was simple: all of the leading AI models that are regularly used by L&D professionals are able to accurately compare two documents and determine what is similar and what is different.

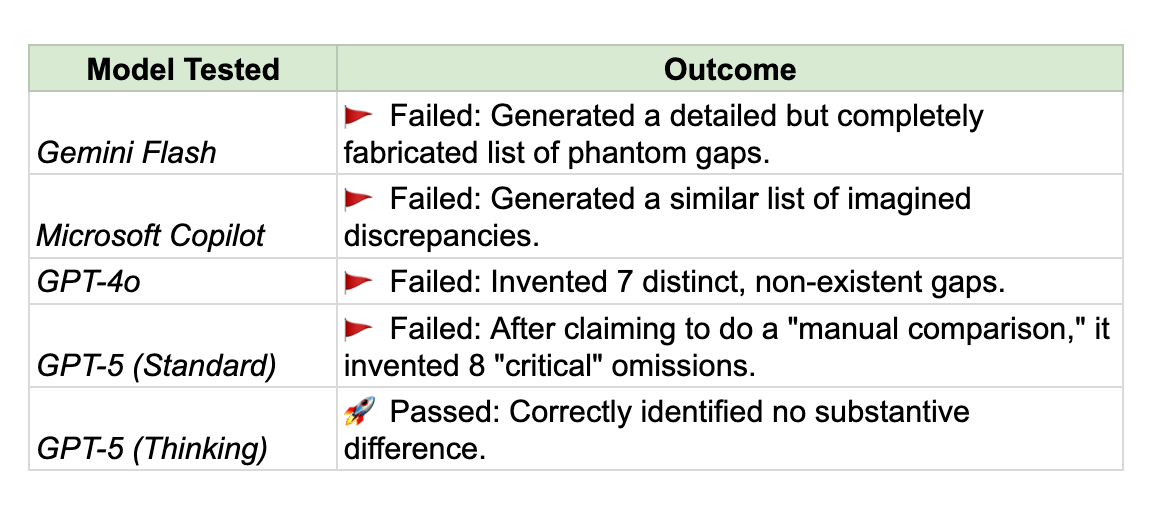

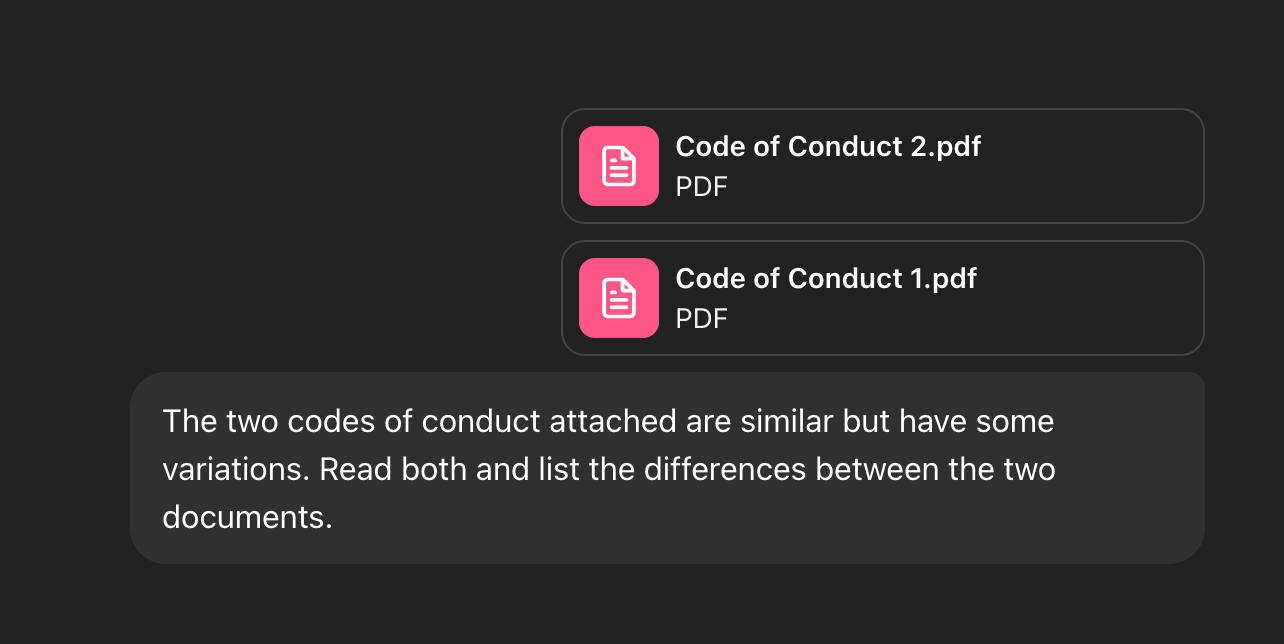

To measure this, we presented two 100% identical code of conduct documents to a range of major AI models—GPT-4o, the standard GPT-5, Gemini, and Copilot—along with a clear, unambiguous prompt:

The two codes of conduct attached are similar but may have some variations. Read both and list any differences between the two documents.

This simple test was designed to check on the AI's analytical integrity: will AI follow my lead and invent differences, or will it spot that the two documents are - in fact - identical?

The expected behaviour was that a reliable AI tool would perform a comparison, find no substantive differences and report that the documents are, in fact, identical.

So what did we find? The TLDR is that 4 out of the 5 models tested produced results that were unreliable and in some cases actively misleading.

With one notable exception (GPT5 Thinking), every one of the most commonly used general-purpose AI models failed to accurately “read” and report on the content of uploaded documents.

4/5 models didn't just get their responses wrong: in each case they confidently and convincingly hallucinated long lists of "critical" gaps, complete with detailed explanations of why these [non-existent] omissions were a major risk if we failed to adjust our training design accordingly.

In 4/5 cases, AI imagined two things:

Structural Differences — variations in both the length and content of the two [identical] documents.

Variations in Purpose and Scope — differences in what the document was intended for, and what what covered within in.

For example, ChatGPT o3 reported that:

“In summary, Code of Conduct 1 (2022) is more detailed, stricter, and modernised, emphasising dignity, respect, anti-harassment, and compliance with sanctions.

Code of Conduct 2 (2015/2019) is shorter and less prescriptive, focusing mainly on financial interests, bribery, and lobbying, without modern behavioural and enforcement provisions.”

ChatGPT 5 Fast added a more analytical and critical lens, concluding that:

”The 2022 Code (Code of Conduct 2) is a broader, more detailed, and stricter update of the 2015 Code (Code of Conduct 1).

It adds new obligations on respect and dignity, expands rules on interests and lobbying, sets clearer sanctions and enforcement mechanisms, and updates financial interest registration categories.”

When we challenged the various models by pointing out the files were - in fact - identical, they would apologise, yet still fail to grasp the core truth, often offering to "try again" and then making a different set of mistakes.

The Science Behind the Failure: Why Most AI Models are Confident Hallucinators

The success of GPT-5 Thinking suggests that the ability of AI models to reliably “read”, summarise and compare documents is technically possible, but is nowhere near standard. So, why is this? There are three main reasons:

LLMs are sycophantic: if we say that there might be omissions (or that there are omissions), they will try to find them

LLMs are risk-adverse: they’re trained to minimise negative impact for the user and therefore “panic” a bit when asked about finding risks. You can test this yourself: come up with some very low-risk medical or legal issue, ask them to find potential risks and say you’re really worried. They’ll find something ridiculous.

LLMs Suffer from context-loss: LLMs turn your text into a list of tokens. A token is a list of numbers that represent a context (a word, punctuation, etc.). They process these tokens sequentially: for every new token, they re-calculate an internal state that is another numerical representation.

This means that once they’ve finished “reading” the first document, they haven’t retained each single word in it: they’ve “squashed” everything into a single list of numbers that is supposed to be an accurate representation of everything that happened before, but it’s not everything - imagine it as a super-condensed summary.

When they start reading the second document, they keep processing its tokens sequentially, so every new word gets “squashed in” that previous internal state (which again, is just numbers) and changes it adding the new context.

When you arrive at the end of the second document, the LLM will not have stored a memory of everything it’s read before, but a very condensed representation. It’s like comparing two documents without having them one next to the other, but just from memory - it’s basically impossible for humans too.

For those of you who are wondering, “why does GPT-5 Thinking perform so well?”, the likely answer is that OpenAI likely use a more methodical, chain-of-thought reasoning process that is not the default for most mainstream models.

Instead of taking a single, holistic ‘glance’ at the documents and jumping straight to an answer, this more advanced approach breaks the complex request—"Find the differences"—into a logical sequence of smaller, more manageable sub-tasks.

This internal process likely mimics the rigorous approach a human expert would take and is more robust because it mitigates the core failures seen in other models by:

Reducing the cognitive load that leads to 'working memory' errors

Overriding some (but not all) "eager-to-please" sycophantic behaviour.

The model's primary difference is that it shifts from finding differences for the user to correctly executing a comparison process. This is the fundamental difference between a simple pattern-matcher and a true and reliable analytical engine.

But here's where it gets concerning: no AI model is infallible, especially if we fail to prompt them in the right way. Our tests reveal a troubling reality — most AI models aren't actually "reading" documents the way we think they are. They're pattern-matching and guessing—often incorrectly.

And in turn, this raises an urgent question: what happens when the L&D industry builds “specialised” AI tools for Instructional Design on top of these unreliable foundations?

The Dangers of "Upload-and-Generate" AI Tools for Instructional Design

In the world of Ed-Tech, so-called “doc to course” AI tools which take documents and turn them into outlines for e-learning courses using AI are becoming increasingly popular, especially in the world of corporate L&D.

Each and every one of these tools are built using generic AI models like those we tested above — so I decided to do some digging to explore which AI models they use and how they test and select those models to ensure the reliability of outputs. The results were pretty shocking.

The TLDR is that information about these tools is are incredibly vague. The documentation for all major players declare the use of “Generative AI models” and their ability to "convert documents into course blocks while retaining your original wording", but provide zero insight into how the complex process of selecting & prompting AI models and parsing & analysing document content actually functions (or how well they perform).

A deep analysis of the top five most popular “doc-to-design” AI tools (used by tens of thousands of Instructional Designers every day) shows that only one discloses which AI model it uses to analyse doc uploads. Spoiler: it uses the deeply unreliable GPT-4o which, they say, helps them to “ensure more accurate results".

None of the top five most popular “doc-to-design” AI tools offer any evidence of any systematic testing or validation of their document analysis, summarisation or extraction processes or the reliability of the document analysis and summarisation processes.

I’ve just started to test the performance of these increasingly popular tools and the pretty shocking. Comparing design outlines to uploaded source docs I have regularly found both missing and hallucinated information.

To be clear: my argument here isn't that these AI tools are inherently bad—it's that they're worryingly vague and used for deeply specialised tasks that they may not actually be capable of performing reliably.

A lack of criticality in the selection of AI models and an apparent lack of performance testing makes it impossible for users of most “doc-to-design” tools to assure the quality and reliability of the content summaries they produce.

Forcing AI to Think Like an Instructional Designer

So what’s the answer? Should we abandon the use of AI in Instructional Design? No, but we must stop blindly building & using AI for Instructional Design without methodical testing, validation and quality assurance.

Tips for Instructional Designers

In practice, this means that Instructional Designers must adopt a critical mindset when using AI.

Never rely on a single, broad prompt like "find the differences." The evidence shows that this invites and multiplies hallucination.

Instead, you need to manually force the AI to be more methodical by breaking the task down. Think of it less as a brilliant analyst and more as a very fast but also very inexperienced literal-minded assistant:

Work in Chunks: Don't upload two 100-page documents and hope for the best. Feed the AI one section or chapter from the first document at a time. This prevents the "context loss" where the AI essentially forgets the beginning of the document by the time it reaches the end.

Extract First, Then Compare: Change your prompting from a single complex command to a two-step process:

Step 1 (Extract): Give the AI a chunk of the source document and ask a simple question: "Summarise the 3-5 most critical rules or concepts in this section."

Step 2 (Verify): Give the AI the corresponding section of the second document along with one concept from Step 1. Ask a targeted, closed question: "Is the following concept present in the attached text? '[Paste concept here]'. Please answer with 'Yes' or 'No' and provide the exact quote if found."

Repeat and Synthesise: Repeat this "extract and verify" process for each key concept in each section. You are the one who synthesises the final gap analysis. This approach turns one big, unreliable task into a series of small, highly reliable ones, dramatically reducing the risk of hallucination.

Once you’ve completed this process, try it again with a different prompt and different model and compare the results. Use only prompt and model which works well enough for specific tasks.

Admittedly this is a lot of work, but it’s also essential to ensure that you are getting as much value from AI as possible while also mitigating risk as much as possible.

Requirements for Instructional Design Products

When it comes to building AI products for Instructional Design, we must ensure that we are engineering processes which are robust and reliable — not just blindly building tools which feel like magic but produce garbage.

At Epiphany, our aim is radical transparency and optimal quality. This means being transparent about which model we use for specific parts of the process, why we chose those models and how we prompt them to optimise for quality.

It also means that we research and test everything before we build it. For example, when building our doc upload feature, we ran thorough controlled research & testing to discovered three critical things:

Not all models are built equal for all tasks: A combination of GPT 5 Thinking & Mistral models works most effectively when parsing documents. Take away: test varied models for how well they perform across specific tasks. Be prepared to combine models where necessary to optimise for efficacy.

Advanced prompting matters: Basic prompts like “carefully review and compare” lead to multiple omissions and hallucinations in all models. Take away: prompting really does matter - learn advanced prompting techniques, write prompts with word-level precision and test rigorously.

Thinking processes are everything: The quality of any AI’s outputs varies significantly depending on how well the thinking process is structured. For example, when it comes to doc analysis, at Epiphany use a structured methodology that we call Structured Content Interrogation (SCI)—our implementation of deep prompt-chaining that makes Epiphany work with the discipline and rigour of an experienced Instructional Designer.

In practice this means that, instead of “blind reading” a document, Epiphany AI:

Deconstructs & Indexes Epiphany doesn’t just “read” the source. It segments all input data into logical, stable pieces—sections, clauses, numbered steps—and builds an index (IDs, headings, cross-references, synonyms/terminology map).

Extracts & Analyses in Detail: For each chunk, a dedicated prompt extracts atomic, standalone concepts. The result is a structured catalogue of key ideas tied to exact source locations.

Runs a Verification Loop

We iterate concept-by-concept. Each concept triggers a fresh, highly specific query against the second document (semantic + lexical match, near-duplicate detection, and normalised terminology) to determine presence/absence, with confidence scoring.Verifies & Syntheses

Only after every concept is checked do we synthesise a report: a traceable log of verified presences and absences, each linked to the source clause and the best-matching evidence (or lack thereof) in the target. Borderline items are flagged for human review.

Our proprietary SCI method is more complex and computationally heavier than a one-shot compare; it turns a vague pattern-matching task (which invites hallucinations) into a disciplined, evidence-based audit.

The result? A substantially reduced error rate and substantial increase in both the quality and reliability of AI’s outputs.

For the user, this means a much more reliable output and - thanks to careful “anti sycophantic”, specialised prompting - much greater transparency from the AI itself about what it has and has not been able to extract from uploaded documents.

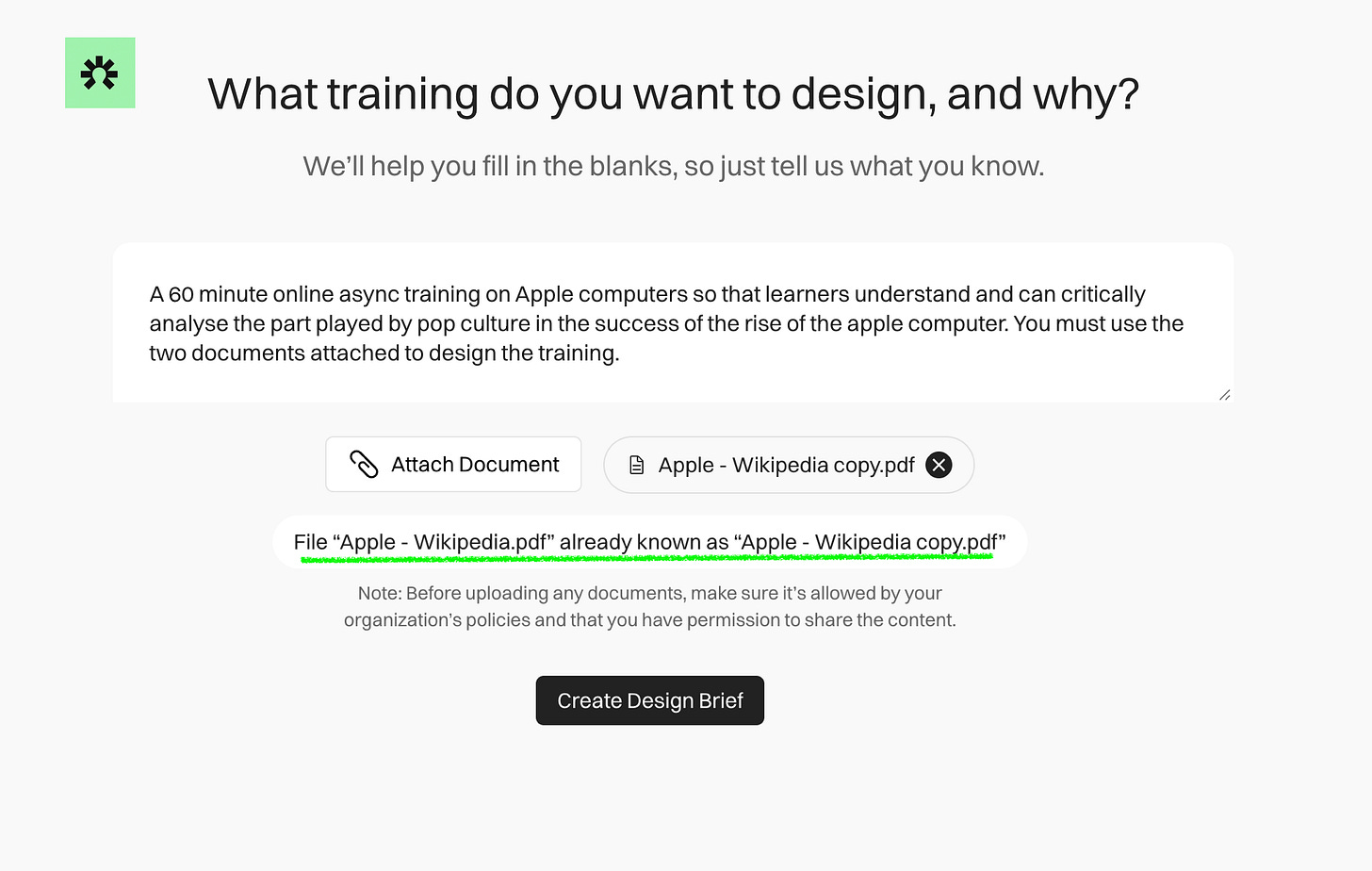

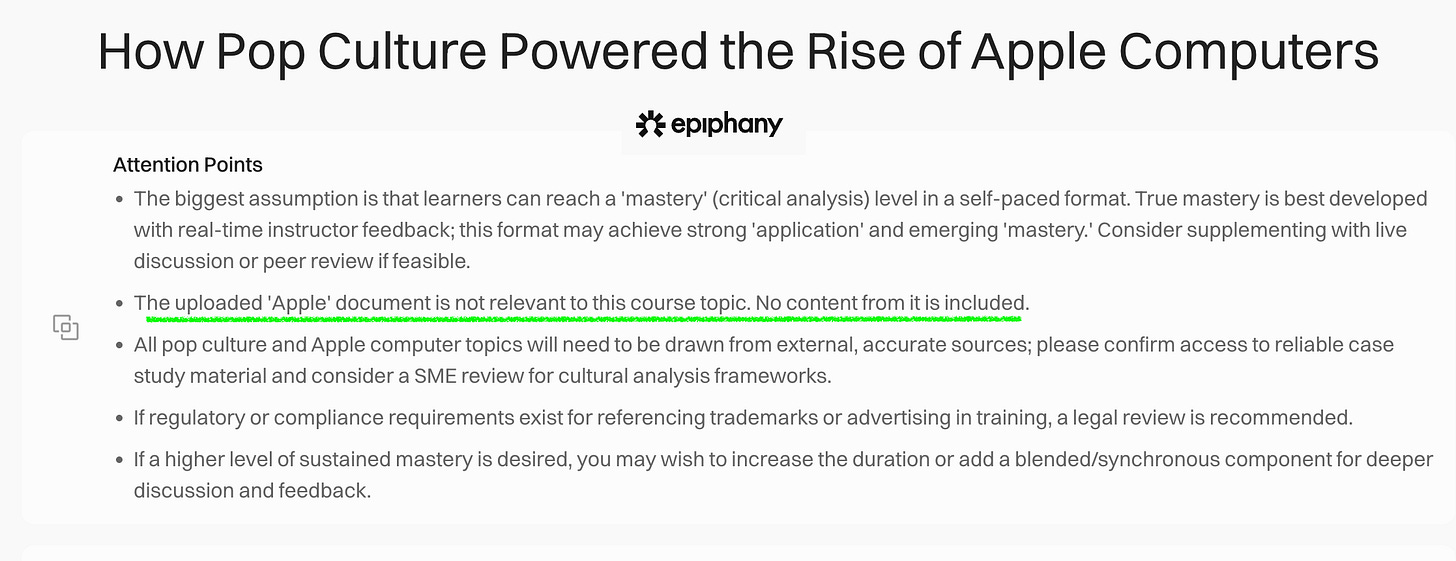

Example: Reading Input Data Properly

Let’s bring this to life through an example. If I give Epiphany two identical documents and ask it to help me to design a training using both, it will recognise this and tell me that they are the same:

If I try the same test in a different doc-to-course AI tool, it does as it’s told and I get a training that repeats the same information twice.

Example: Spotting Relevant (and Irrelevant) Data in Epiphany

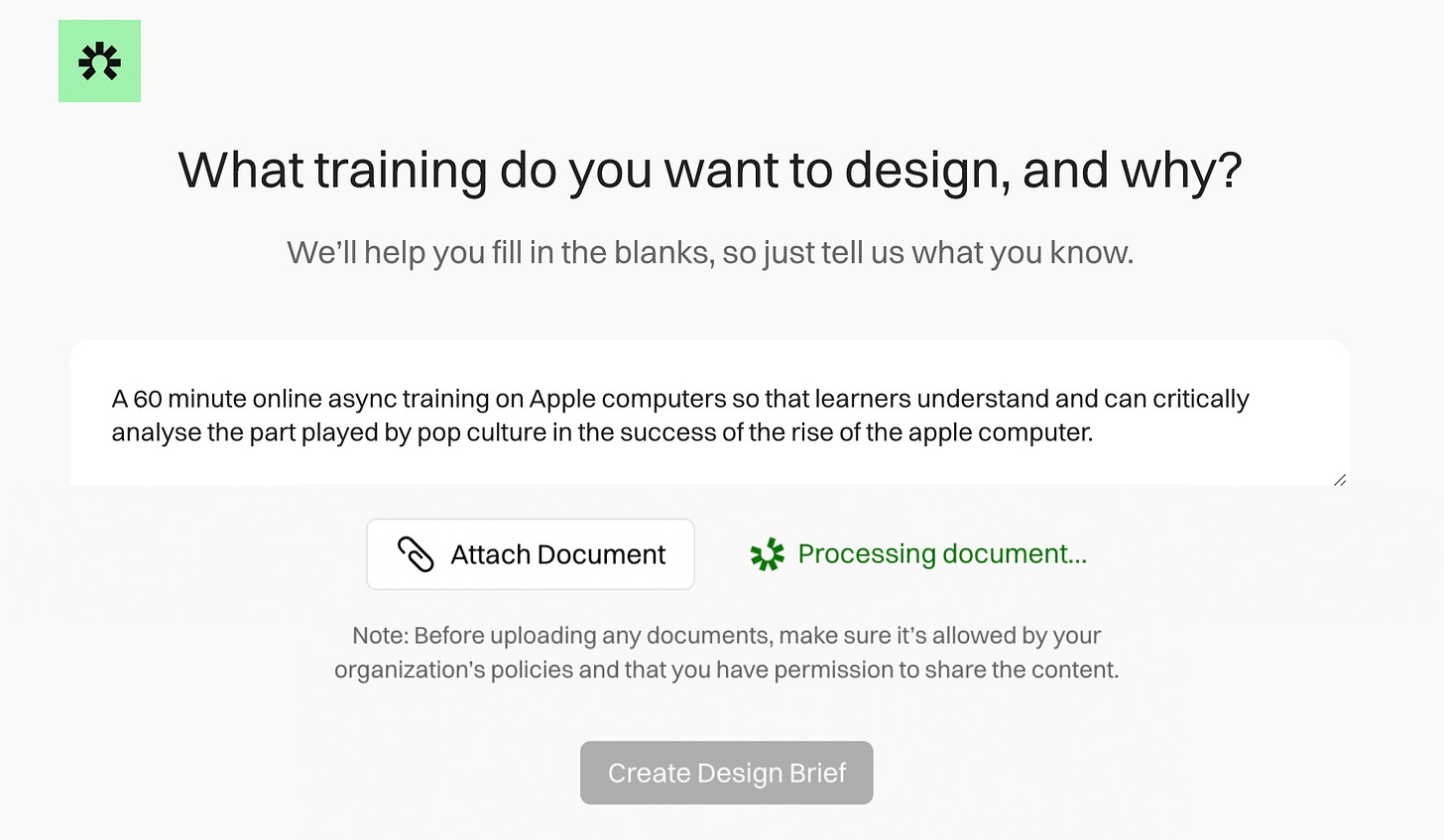

If I want to work with Epiphany to design a training on Apple Computers but upload a PDF about apples (the fruit), Epiphany will - like any smart Instructional Designer - tell me that the document is unsuitable and can’t be used. If the document I upload only covers some of the concepts and skills required to achieve the goal, it will flag this too.

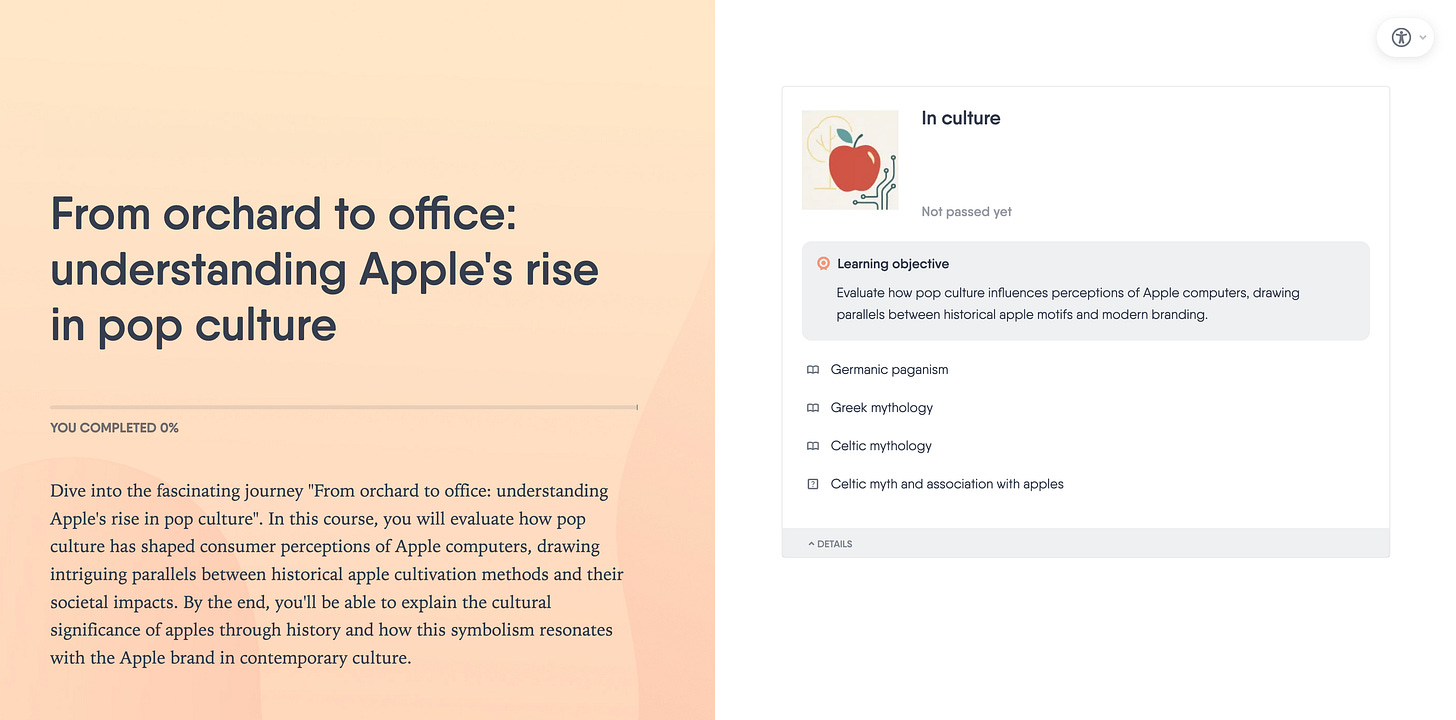

If I try exactly the same test in a popular doc-to-course AI tool, the tool blindly does as it is told and, as a result, I get a very misleading and, frankly, downright bizarre training design titled, “From Orchard to Office”:

By blindly combining what I asked for (a training on Apple computing) with the information I provided (a wikipedia article on apples) I get a training that has no hope of ever achieving the brief that I gave to the AI: “A 60 minute online async training on Apple computers so that learners understand and can critically analyse the part played by pop culture in the success of the rise of the apple computer.”

Meanwhile, thanks to smart model selection, advanced prompting techniques and the application of expert Instructional Design methodologies, the equivalent Epiphany training is optimised to hit the defined brief for the defined user:

Conclusion: The Real AI Hack is the Methodology, Not Just the Model

The central lesson of my work with AI so far is this: the quest for a single, "smart" AI is misguided. The value of AI isn't in the raw intelligence of the model, but in the rigour of the methodology applied by those who use them.

Here are three key takeaways from the testing I’ve done with the team at Epiphany so far:

Generic AI Fails on Its Own: The null test I shared here isn’t an edge case; it’s demonstration that the base behaviour of large language models—pattern-matching and trying to please the user—is fundamentally unsuited for the analytical precision our role demands. They will confidently invent phantom gaps that can derail an entire project.

Methodology is the Only Real Fix: Only by deeply understand your discipline and the processes that underpin it can you force AI to work methodically and reliably. Your professional judgment in guiding this process is critical — AI needs you!

Demand Tools That Respect Your Craft: Software developers have already moved beyond asking ChatGPT generic questions to help them code. Instead, they work in specialised AI tools like Cursor, which have a deep understanding of the specific tasks, workflows and outputs of coders and use specific prompts and models to augment their work.

I believe strongly that Instructional Design must and will follow the same path. The future of effective and ethical AI in our field isn't about every ID having a generic chatbot license; it’s about building and using specialised tools built with a rigorous, pedagogically-sound methodology and expert AI application at their core.

We should seek out tools that don't just give us quick answers, but that embed the disciplined process of an expert instructional designer into their very code.

That is the true efficiency hack we've been waiting for: not an AI that replaces our thinking, but an AI that has been taught to think like us.

Happy innovating!

Phil 👋

PS: Want to learn more about what we’re researching, testing & building at Epiphany? Check out the website and join the waitlist here, and follow us and our research on LinkedIn here.

PPS: Want to explore the most powerful AI models for a range of Instructional Design tasks? Apply for a place on my bootcamp!

PPPS: Want to go even deeper? Check out my co-founder’s more technical analysis of our doc-upload research.